Machine Learning Deployments in 2023

Deploying applications and infrastructure is a complex process that requires coordination between infra and application developer teams. An internal developer platform helps both teams move fast with fewer mistakes, which improves both productivity and stability.

Key things to take care of in Deployment and Software Lifecycle

- Dockerization: Packaging dependencies with pinned versions is important for reproducibility. Unneeded dependencies bloat Docker images, which leads to longer startup times.

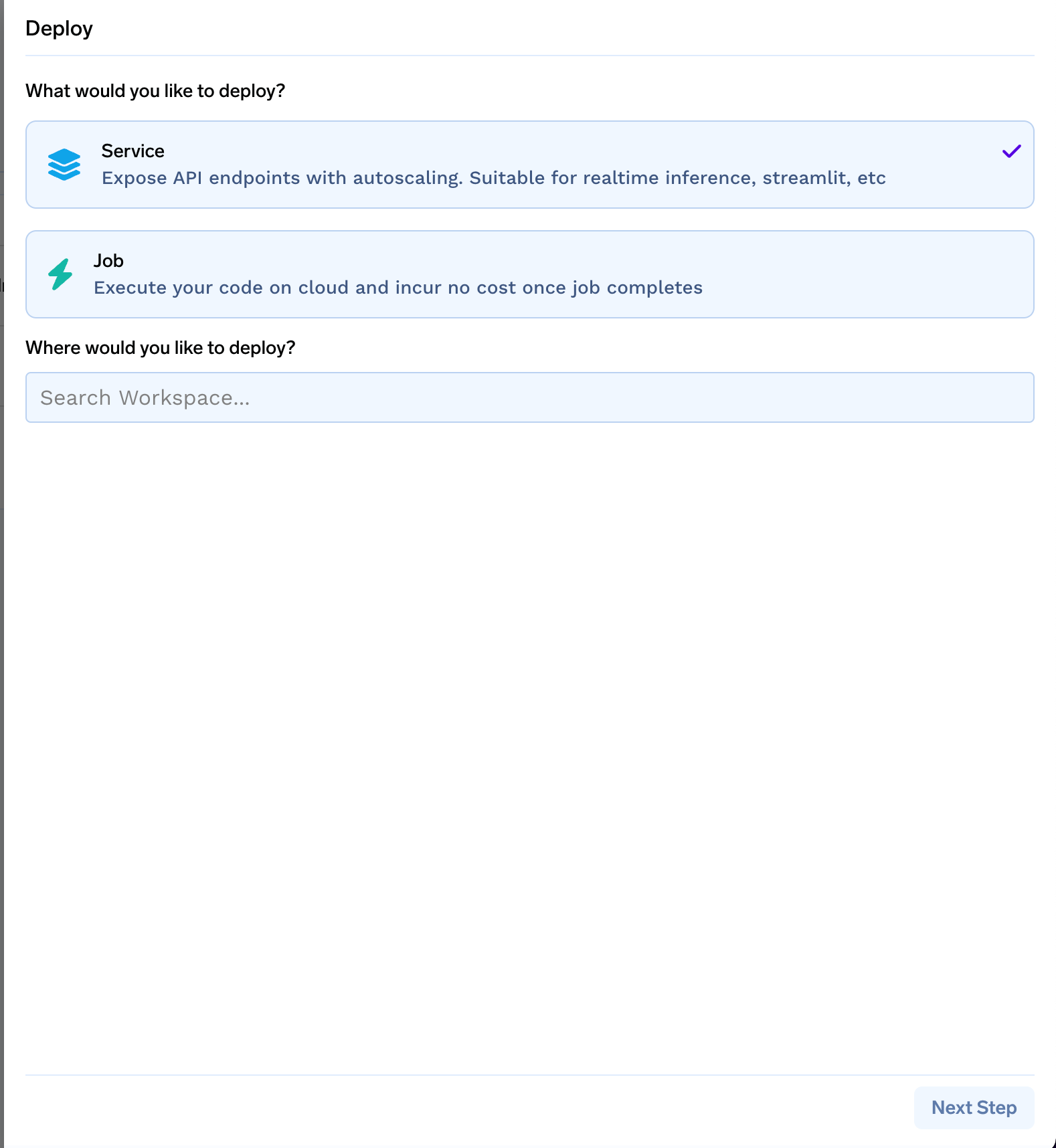

- Choice of mode of deployment: There are many options for deployment (web services, jobs, serverless methods, static websites). Each has its own pros and cons, and the best choice depends on traffic patterns, latency requirements, and memory and CPU requirements.

- Scalability: Scale brings a lot of problems. It requires properly configured scaling policies and autoscalers, and services that are stateless, all while keeping costs optimized.

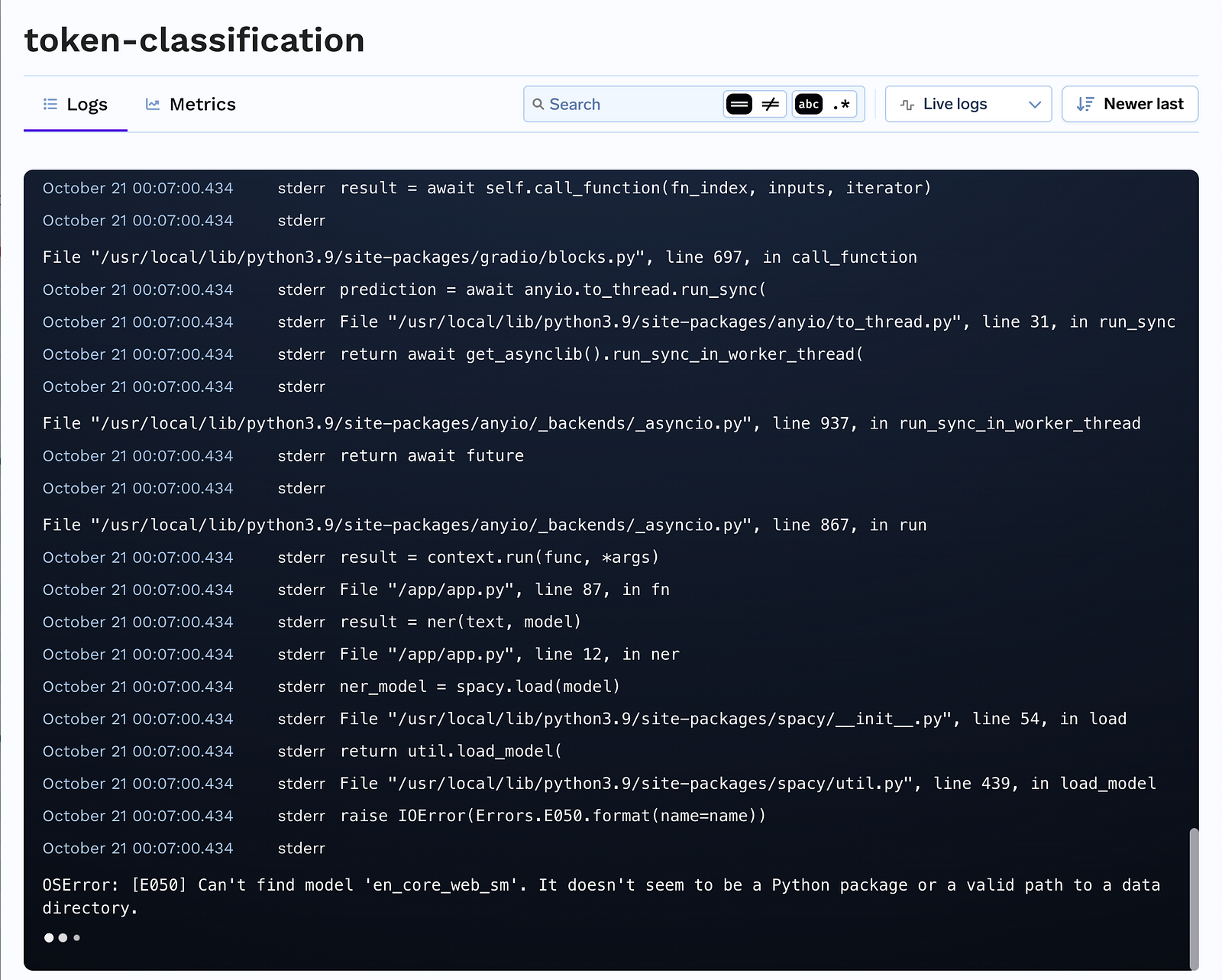

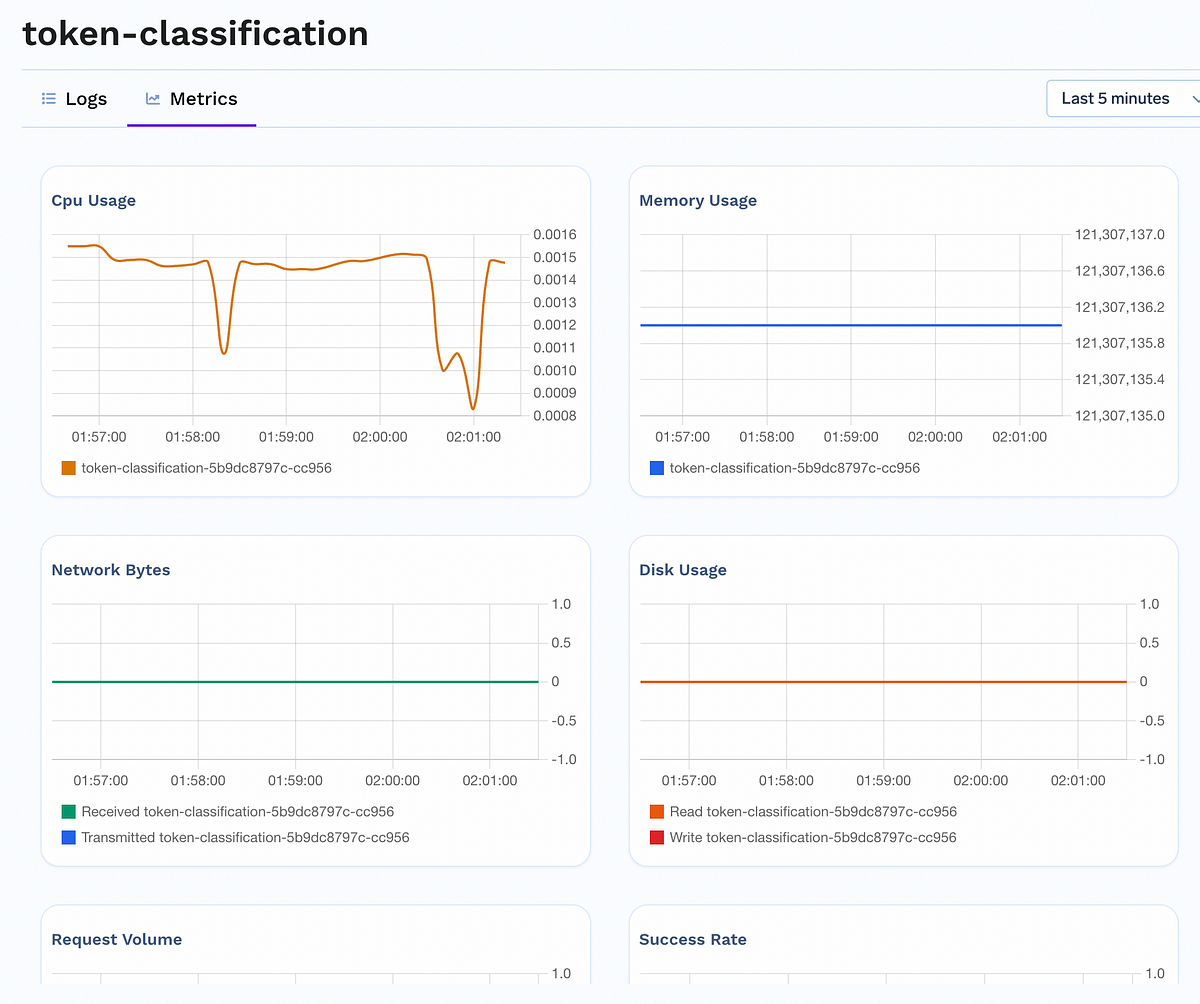

- Monitoring and Debuggability: How do you compare different versions of software? Viewing logs and metrics, logging custom metrics, and observing them on dashboards should be very easy.

- Secrets Management and Security — There needs to be support for proper secret management as well as providing access to other systems using service accounts rather than circulating credentials.

- Automation and CI/CD: A good CI/CD pipeline is crucial for fast development and for catching bugs early. Creating a new environment should take one click, and moving from develop to staging to production in an automated way makes the process much smoother.

- Versioning, Immutable Deployments and rollbacks: It should be easy to roll back a deployment along with its secret values. In most systems today, it is almost impossible to roll back a deployment together with the set of environment variables or secret values it last ran with.

- Multiple Environments: Being able to manage multiple environments, or spin dynamic environments up and down, helps developers test in a more isolated way and makes overall progress much faster at lower cost.

- API Security and authentication: APIs need authentication both externally and internally. Which method fits: simple username-password authentication, OIDC authentication, service accounts, or bearer tokens?

- Rate Limiting and load balancer configuration: General good practices, such as load balancer configuration, should be incorporated for every API.

- Speed and autonomy of deployment: Developers should feel in control of their deployments rather than being abstracted away from them. Keeping them in the loop and making them autonomous, while making sure the core infra doesn’t break due to human mistakes, is one of the core goals of the platform.

- Cost optimization: Choosing the right hardware and the right type of deployment for each workload helps keep costs down.

- Coordination of Infra and Application Changes: When moving from one environment to another, infra changes often need to be rolled out as well. If they are missed, the application breaks in production.

- AB Testing / Traffic Shaping
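To make the scalability item in the list above more concrete, here is a minimal sketch of the proportional scaling rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name and the `min_replicas` / `max_replicas` defaults are illustrative assumptions, and the tolerance band that the real autoscaler applies is omitted.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Proportional scaling rule, clamped to [min_replicas, max_replicas].

    The bounds are hypothetical defaults chosen for this example.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 60% target
    print(desired_replicas(4, 90, 60))
```

The upper bound is what ties scaling back to cost: without it, a traffic spike or a bad metric can scale a service far beyond its budget.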

Introducing TrueFoundry

TrueFoundry is a deployment platform that can serve as the internal developer platform for a company. The goal is to make it really easy for developers to deploy and maintain their applications without detailed knowledge of infrastructure, and to make it easy for infra / devops teams to create policies that enforce engineering and security best practices.

What are the key design principles behind the TrueFoundry platform?

- Fully API driven architecture — TrueFoundry follows a fully API driven architecture very similar to Kubernetes. This allows users to build interfaces, UIs and automations on top of it.

- Extensible PaaS on top of Kubernetes: The core capabilities provided by Kubernetes can be customized and extended with plugins.

- Gitops driven (supports a non Gitops mode too)

- Fine grained access control from day 1

- Infrastructure as Code (Terraform) — can be spun up on any cloud account in no time.

- Security by default: TrueFoundry follows the principle of least privilege (POLP) and uses security best practices such as service accounts, TLS, data encryption at rest, and support for air-gapped environments.

- Cloud Native by design: We consciously choose components that are cloud native by design and available on all cloud providers.

Key functionalities supported in TrueFoundry

Different methods of Deployment across clouds:

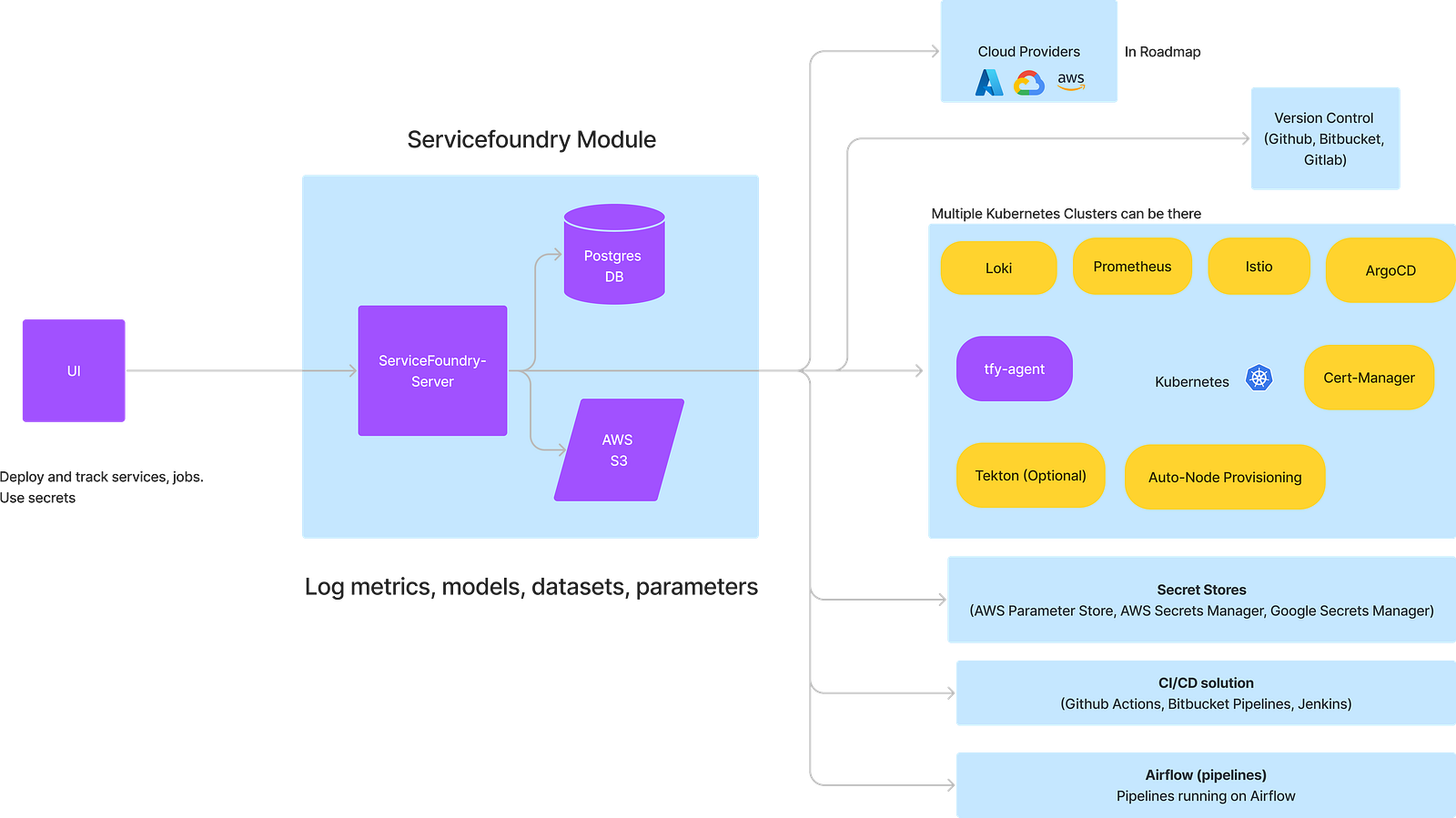

Different workloads suit different methods of deployment, depending on their traffic pattern and their CPU and memory consumption. The goal is to provide a single interface for deploying across multiple systems, such as services on Kubernetes, jobs on Kubernetes, AWS Lambda, serverless on Kubernetes (Knative), Google Cloud Run, and model servers. TrueFoundry integrates with Git repositories to allow deployment directly from any Git repo.

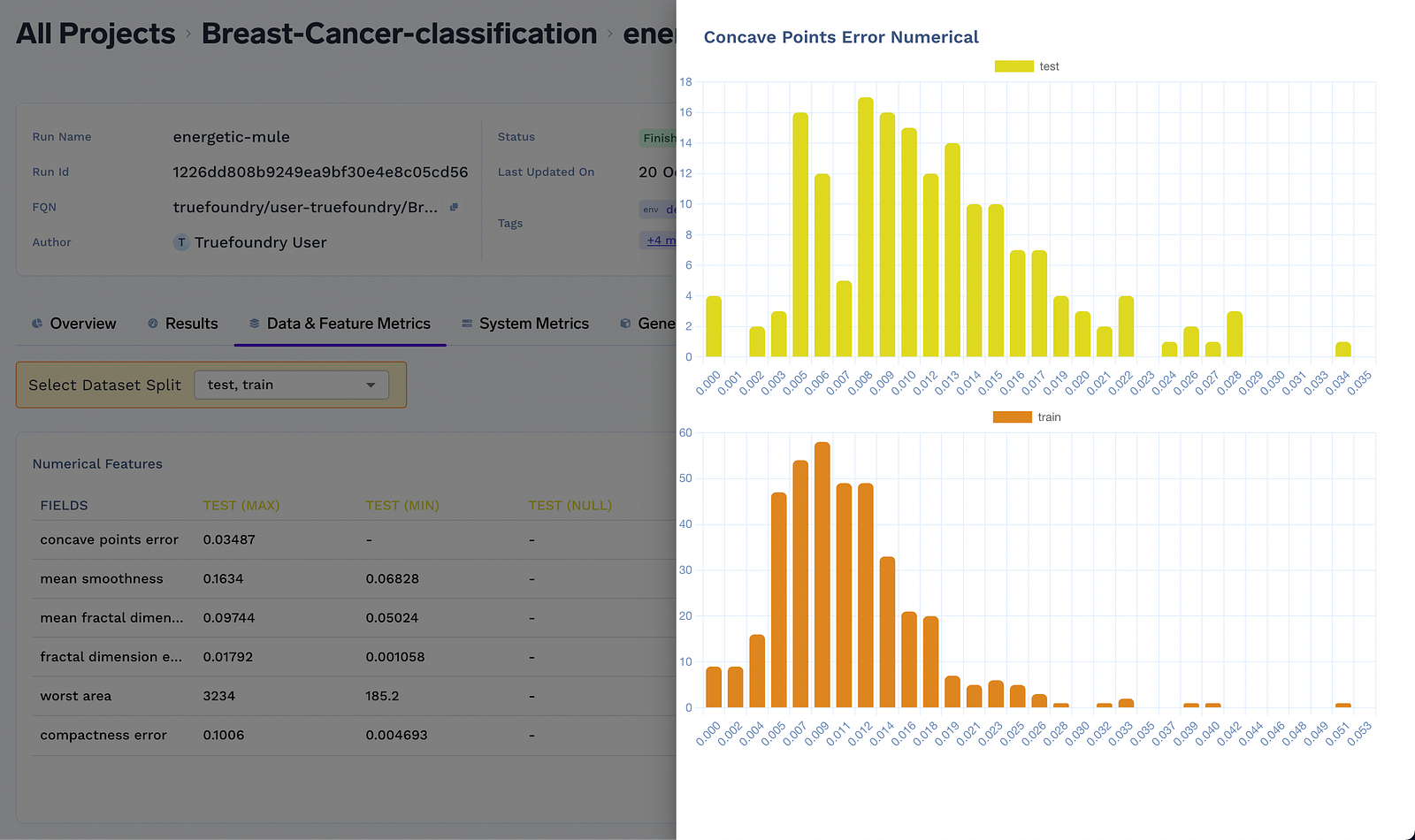

Logging and Visualizing metrics and Graphs across jobs

TrueFoundry allows developers to log detailed metrics, parameters, images, plots and artifacts, and to compare job runs using detailed dashboards.

Software Canary / Shadow Testing

TrueFoundry makes it really easy to test new versions of software on live data without hurting existing production traffic, by using shadow testing. It also supports canary rollouts, which gradually shift traffic to the new version.

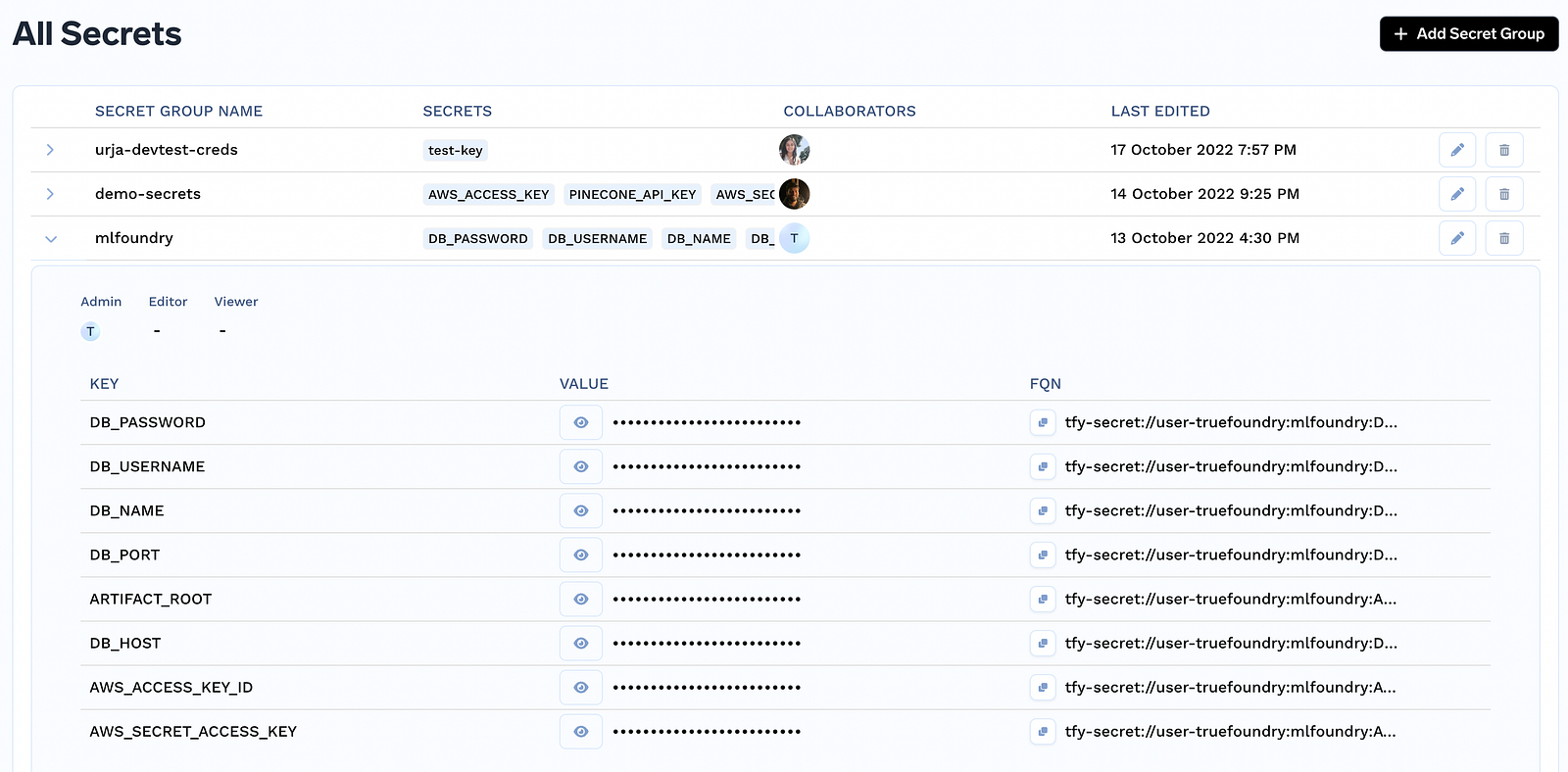
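The two techniques can be sketched in a few lines. This is a simplified illustration, not TrueFoundry's implementation: `pick_version` and `shadow_call` are hypothetical names, and in practice traffic splitting is usually done by the ingress or service mesh rather than in application code.

```python
import hashlib


def pick_version(request_id: str, canary_percent: int) -> str:
    """Canary rollout: send a stable fraction of requests to the new version.

    Hashing the request id makes the decision deterministic per id.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # bucket in the range 0..99
    return "canary" if bucket < canary_percent else "stable"


def shadow_call(request, stable_handler, shadow_handler, mismatches: list):
    """Shadow testing: the caller always gets the stable response.

    The new version sees the same request, and any difference or failure
    is only recorded for later inspection.
    """
    response = stable_handler(request)
    try:
        shadow_response = shadow_handler(request)
        if shadow_response != response:
            mismatches.append((request, response, shadow_response))
    except Exception as exc:  # a failing shadow must never affect the caller
        mismatches.append((request, response, repr(exc)))
    return response
```

Raising `canary_percent` step by step (for example 5, 25, 50, 100) gives the gradual traffic shift, and setting it back to 0 is the rollback.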

Secrets Management

Developers will be able to create secrets and reference them in their applications. Some of the key features of secrets management:

- The secret storage is backed by secret stores like Vault, AWS Secrets Manager, Google Secret Manager, AWS Parameter Store, etc. None of the actual secret values are stored on the platform itself.

- The platform keeps track of which secret is used in which application, so that whenever a secret is changed, it can prompt users to redeploy the corresponding services.

- The platform maintains secret versions which allow rollback of applications along with the corresponding secret versions.

- Access control on secrets: Viewers can see the keys of secrets but not their values. This way the devops / infra team can share the keys with developers without worrying about exposing the values.

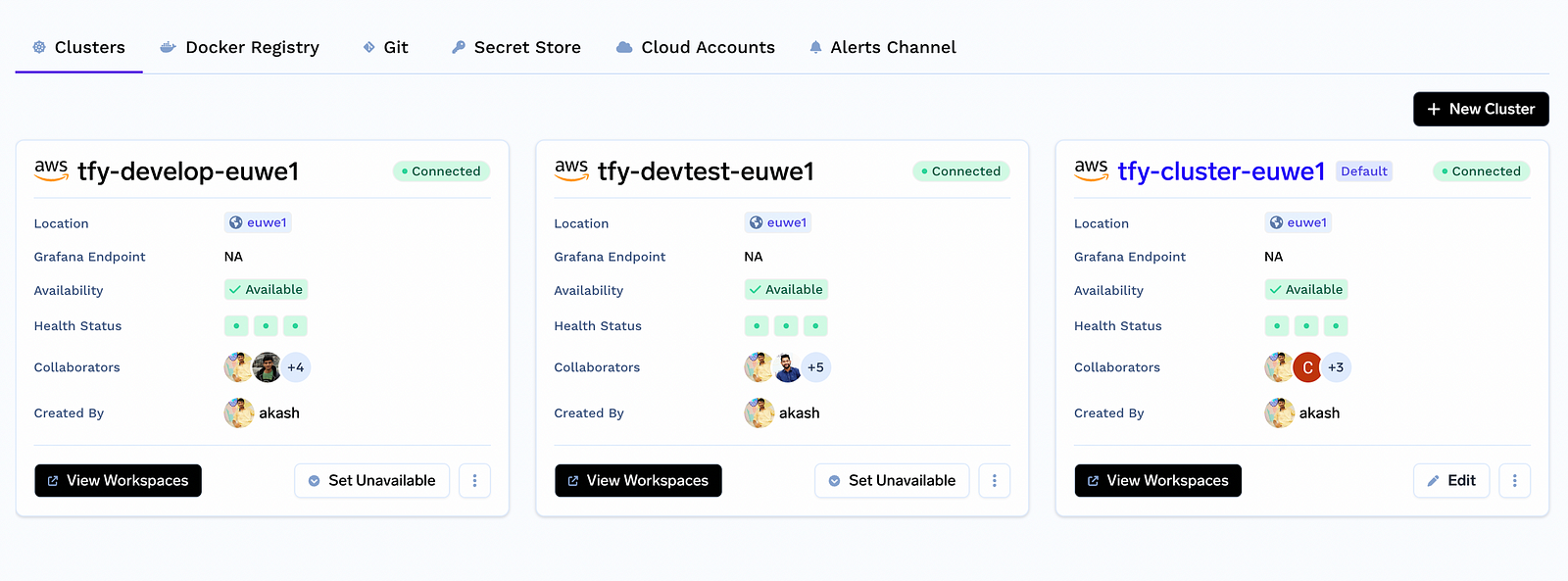
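The tracking and rollback behaviour described above can be pictured with a small in-memory model. This is a sketch under stated assumptions: `SecretTracker` and its methods are hypothetical names, only version numbers are tracked (the values stay in the external secret store), and a real system would persist this state.

```python
from dataclasses import dataclass, field


@dataclass
class SecretTracker:
    # secret name -> latest version number (values live in the secret store)
    latest: dict = field(default_factory=dict)
    # app name -> list of deployments, each a {secret name: version} snapshot
    deployments: dict = field(default_factory=dict)

    def rotate(self, secret: str) -> int:
        """Record that a secret got a new version."""
        self.latest[secret] = self.latest.get(secret, 0) + 1
        return self.latest[secret]

    def deploy(self, app: str, secrets: list) -> dict:
        """Deploy an app pinned to the current version of each secret."""
        snapshot = {name: self.latest[name] for name in secrets}
        self.deployments.setdefault(app, []).append(snapshot)
        return snapshot

    def apps_needing_redeploy(self) -> list:
        """Apps whose latest deployment uses an outdated secret version."""
        stale = []
        for app, history in self.deployments.items():
            current = history[-1]
            if any(self.latest[n] > v for n, v in current.items()):
                stale.append(app)
        return sorted(stale)

    def rollback(self, app: str) -> dict:
        """Redeploy the previous snapshot, including its secret versions."""
        history = self.deployments[app]
        if len(history) < 2:
            raise ValueError("no earlier deployment to roll back to")
        previous = dict(history[-2])
        history.append(previous)
        return previous
```

Because every deployment stores the secret versions it was created with, a rollback restores the application and its secrets together instead of pairing old code with new secret values.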

Multi-Cluster Management and Deployment

TrueFoundry integrates with multiple clusters and manages access control across them. It doesn’t store the cluster credentials itself, which makes it safe to use across different users in the company.

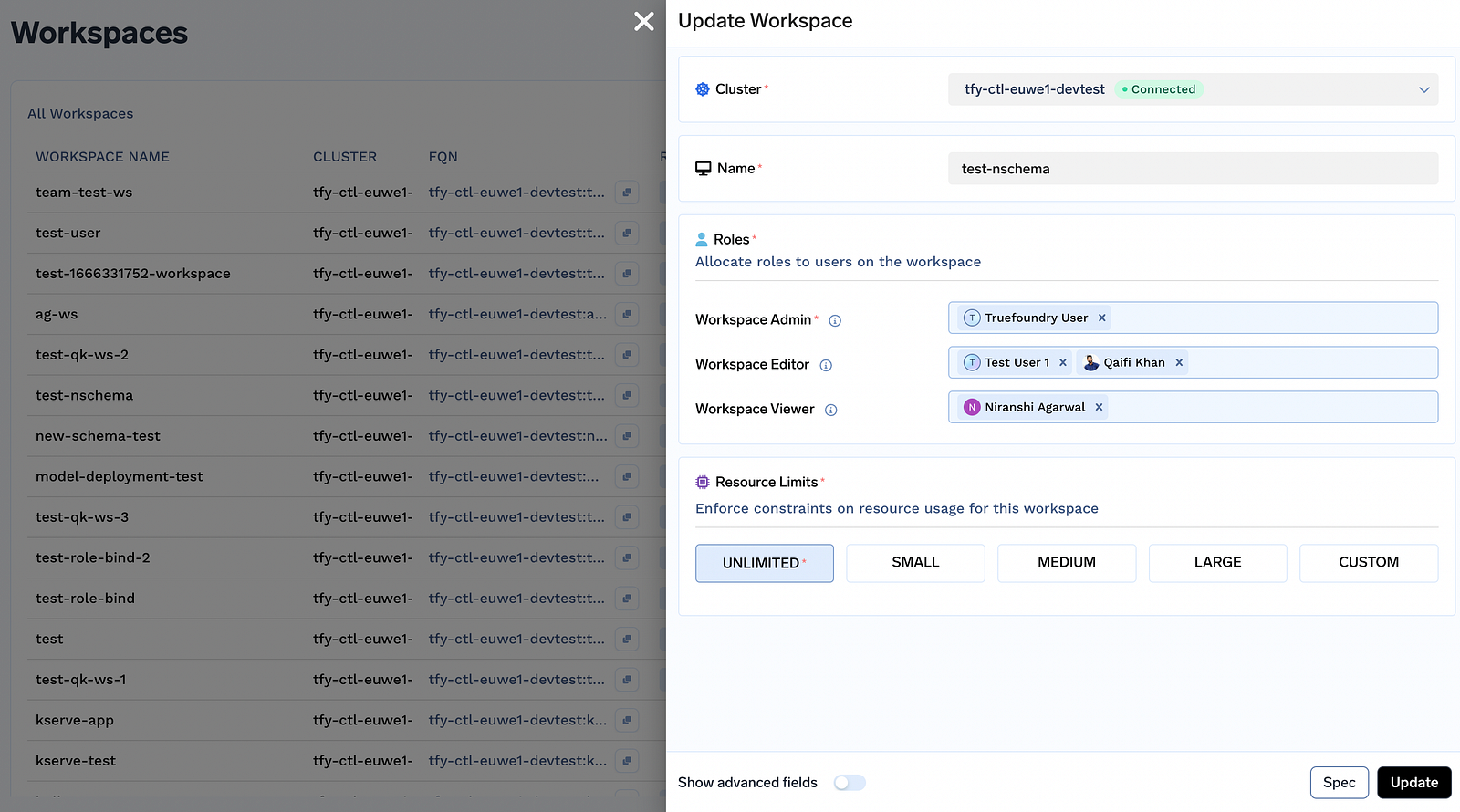

Access control on Kubernetes for different teams

TrueFoundry makes it easy to put access control on Kubernetes namespaces and allocate them to different teams with resource quotas. This lets developers be more autonomous while the infra remains stable, cost-controlled and secure.
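As a rough illustration of the idea, the check below combines team ownership of a namespace with a resource quota. All names here (`Resources`, `Namespace`, `can_deploy`) are hypothetical; on a real cluster these rules are enforced by Kubernetes RBAC and ResourceQuota objects, not by application code like this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpu_millicores: int
    memory_mib: int


@dataclass
class Namespace:
    name: str
    team: str          # the team this namespace is allocated to
    quota: Resources   # upper bound for the whole namespace
    used: Resources    # what running workloads already request


def can_deploy(ns: Namespace, user_team: str, request: Resources):
    """Return (allowed, reason) for a new workload in the namespace."""
    if user_team != ns.team:
        return False, "team has no access to this namespace"
    if ns.used.cpu_millicores + request.cpu_millicores > ns.quota.cpu_millicores:
        return False, "CPU quota exceeded"
    if ns.used.memory_mib + request.memory_mib > ns.quota.memory_mib:
        return False, "memory quota exceeded"
    return True, "ok"
```

A team can deploy freely inside its own namespace, and the quota caps how much of the shared cluster, and therefore how much cost, that autonomy can consume.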

Basic Monitoring and Logging UIs directly viewable on the platform

TrueFoundry isn’t opinionated about the logging or metrics platform that you want to use. The goal instead is to integrate with a few common logging and monitoring solutions so that we can show a quick overview on the platform itself. For more detailed and customizable views, we recommend using existing monitoring solutions like Prometheus, Loki, ELK, Datadog or NewRelic.

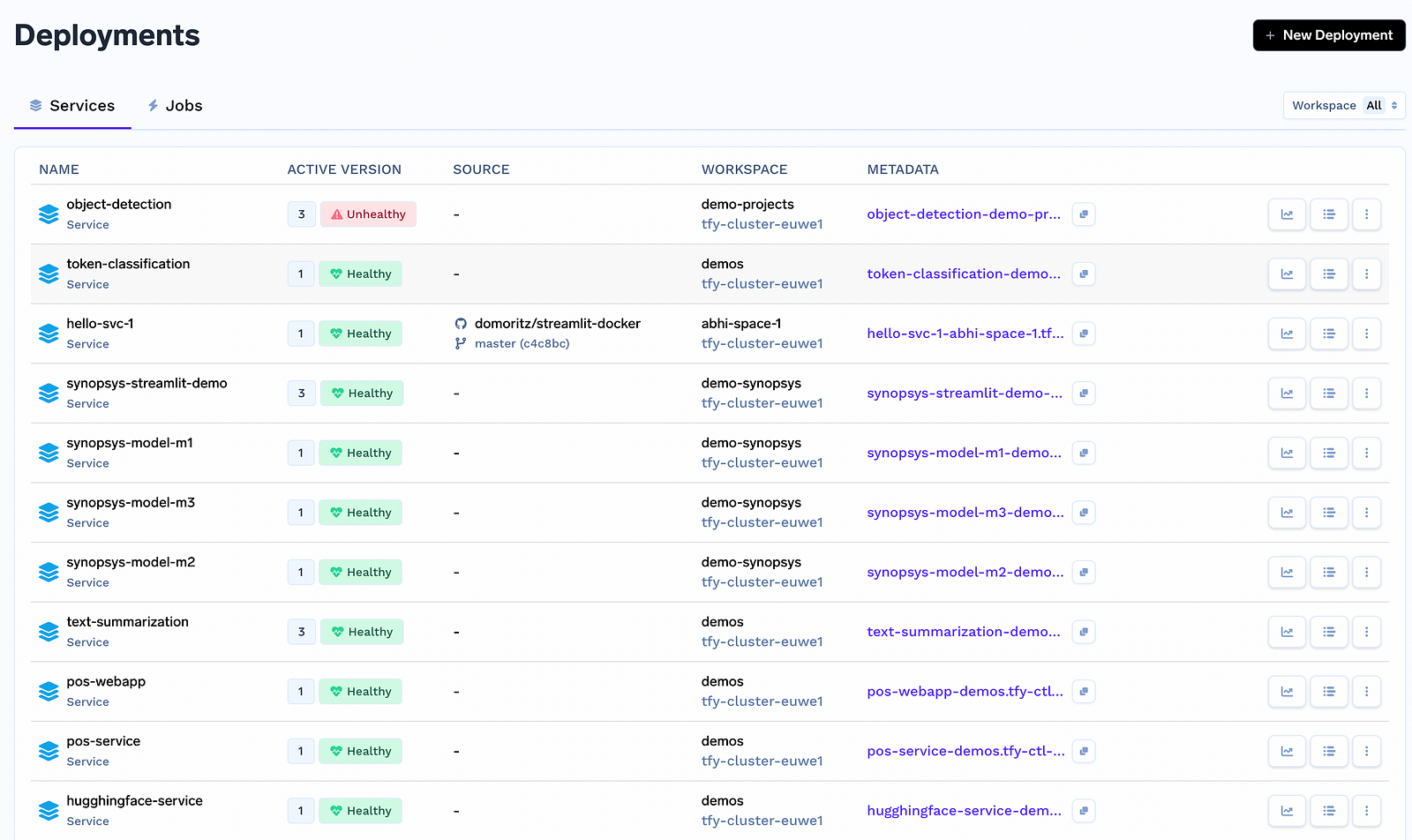

Unified view of all services deployed and running (Service Catalog)

Cost estimation and optimization at the service / team / engineer level

We want to make it possible to track cost estimates and optimizations at a per-service or per-team level. Simply making cost visible to every engineer creates awareness to optimize costs and removes that burden from a single team.
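A per-service and per-team breakdown is essentially an aggregation over usage records. The sketch below assumes a simple record shape and made-up unit prices; neither comes from TrueFoundry, and real cloud pricing is considerably more involved.

```python
from collections import defaultdict

# Hypothetical unit prices, chosen only for illustration.
USD_PER_CPU_CORE_HOUR = 0.04
USD_PER_GIB_HOUR = 0.005


def estimate_costs(usage_records):
    """Aggregate estimated cost per service and per team.

    Each record is a dict with the keys:
    service, team, cpu_cores, memory_gib, hours.
    """
    by_service = defaultdict(float)
    by_team = defaultdict(float)
    for rec in usage_records:
        cost = rec["hours"] * (
            rec["cpu_cores"] * USD_PER_CPU_CORE_HOUR
            + rec["memory_gib"] * USD_PER_GIB_HOUR
        )
        by_service[rec["service"]] += cost
        by_team[rec["team"]] += cost
    return dict(by_service), dict(by_team)
```

Grouping the same records by the engineer who deployed the service would give the per-engineer view in the same way.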

Deploy any terraform module (hence enabling deployment of any managed database, queue or caching services)

We want to enable deployment of cloud services using Terraform modules written by the devops or infra team. For example, the devops team can create a Terraform module for RDS, and developers can then provision a database from the UI based on that module. This will rely on the plugin framework mentioned below and a Terraform controller on Kubernetes.

Will TrueFoundry work with my existing stack?

At TrueFoundry we don’t want to reinvent the wheel, so we use existing systems as much as possible. That’s why we integrate with a lot of existing infrastructure tools, so that users can bring their own infrastructure or customize TrueFoundry according to their own needs.

- Cloud accounts (AWS, GCP, Azure): this helps us provision clusters automatically, create Lambda functions, and deploy managed services.

- Git integration (GitHub, Bitbucket, Azure): this helps developers deploy their applications directly from their Git repositories.

- Secret Stores (AWS Secrets Manager, Google Secret Manager, HashiCorp Vault): this way the actual values of the secrets are stored in the secret stores and not on the platform.

- Docker Registry: Docker Hub, Google GCR, Amazon ECR, GitHub Docker registry

- Terraform integration

- Monitoring Integrations: Prometheus, Grafana, Datadog, NewRelic

- Logging Infrastructure Integrations — Loki, Datadog, ELK stack.

- CI/CD Integration: TrueFoundry can integrate with existing CI/CD pipelines like GitHub Actions, Jenkins, AWS CodeBuild, Google Cloud Build, etc.

If you don’t see your tool on this list, we will be happy to work with you and add more integrations.

How does TrueFoundry enhance security?

Principle of least privilege (POLP)

We follow the principle of least privilege and make it easy for infra teams to enforce it throughout the stack. Developers can be added to whatever workspaces they need access to, and their changes will be constrained to those workspaces.

No secrets or passwords stored on the TrueFoundry platform

TrueFoundry doesn’t store any sensitive information itself, such as Kubernetes credentials or secrets. All data is encrypted at rest. We recommend providing all access through service accounts.

We also plan to provide complete audit logs, which help teams understand all the actions taken across platforms.

How is TrueFoundry open and customizable?

Kubernetes Cluster is in your control

TrueFoundry exposes the raw Kubernetes cluster that it deploys workloads on and provides full access to customers (including kubectl, with proper permissions). Customers can do pretty much whatever they want on the cluster alongside TrueFoundry, without ever being blocked by it.

Apart from this, we also support a plugin framework that makes it easy to build further plugins, similar to CRDs on Kubernetes.

Building Custom Plugins on TrueFoundry

TrueFoundry supports each deployment methodology as a custom plugin. To create a plugin, a user has to write 3 parts:

- An input CUE file defining the input schema

- Processor which defines how to convert the input spec to an output spec

- Output spec that will be sent to Kubernetes.

Currently the input spec and output spec need to be written in CUE, and the processor needs to be written in Go. In the future, we may allow input and output schemas to be written in JSON Schema and processors to be written in any language.

We auto-generate the UI and the Python interfaces from the input CUE files.
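The three parts can be pictured with a small sketch. It is written in Python purely for illustration (the source states that real plugins use CUE for the specs and Go for the processor), and the schema fields and function names are assumptions. The output is a standard Kubernetes `apps/v1` Deployment manifest expressed as a dict.

```python
# Part 1: input schema (a stand-in for the input CUE file).
INPUT_SCHEMA = {"name": str, "image": str, "port": int, "replicas": int}


def validate(spec: dict) -> dict:
    """Check that the input spec has every field with the expected type."""
    for key, expected in INPUT_SCHEMA.items():
        if key not in spec:
            raise ValueError(f"missing field: {key}")
        if not isinstance(spec[key], expected):
            raise TypeError(f"{key} must be of type {expected.__name__}")
    return spec


# Part 2: processor, converting the input spec into the output spec.
def process(spec: dict) -> dict:
    spec = validate(spec)
    labels = {"app": spec["name"]}
    # Part 3: output spec, the manifest that would be sent to Kubernetes.
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": spec["name"], "labels": labels},
        "spec": {
            "replicas": spec["replicas"],
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": spec["name"],
                        "image": spec["image"],
                        "ports": [{"containerPort": spec["port"]}],
                    }]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = process(
        {"name": "web", "image": "nginx:1.25", "port": 80, "replicas": 2}
    )
    print(manifest["kind"], manifest["spec"]["replicas"])
```

Because the input schema is declarative data, it is the natural source for generated forms and client interfaces, which is the role the input CUE files play here.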

How to onboard onto TrueFoundry?

Setting up of infrastructure by infra/devops teams:

- Create Kubernetes clusters using Terraform. TrueFoundry provides sample Terraform code that can spin up clusters in 1 click while committing the Terraform code to a GitHub repo. Devops / infra teams can customize the Terraform code as they see fit and reapply it.

- Create service accounts via terraform to provide access to other infrastructure from Kubernetes namespaces.

- Add the service accounts to workspaces on the TrueFoundry platform and allot workspaces to different teams.

- TrueFoundry bootstraps the Kubernetes cluster with a default monitoring stack (Kube-prometheus-loki-grafana stack), Istio (ingress) and ArgoCD (infra deployment). TrueFoundry will dump the manifest files to a GitHub repository, where the infra team can change them according to their requirements.

- For deploying managed infrastructure, infra teams can provide Terraform modules for DB, queue, cache, etc. Developers can then provision this infra using the modules provided by the infra team.

- Onboard developers on to the platform.

- Assign workspaces to teams / applications.

- Developers can then start deploying in no time following the docs.

How does TrueFoundry work?

TrueFoundry has 2 components: a control plane and a workload cluster agent. This enables TrueFoundry to orchestrate workloads across multiple clusters.
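One common way to structure such a split is for each cluster's agent to pull pending work from the control plane. The source does not say whether TrueFoundry uses a pull or push model, so the sketch below is an assumption, and `ControlPlane` and `ClusterAgent` are hypothetical names.

```python
from collections import defaultdict, deque


class ControlPlane:
    """Holds the desired workloads for every registered cluster."""

    def __init__(self):
        self._pending = defaultdict(deque)

    def submit(self, cluster: str, workload: str) -> None:
        self._pending[cluster].append(workload)

    def poll(self, cluster: str) -> list:
        """Hand over (and clear) everything queued for one cluster."""
        queue = self._pending[cluster]
        items = list(queue)
        queue.clear()
        return items


class ClusterAgent:
    """Runs inside a workload cluster and applies what it is given."""

    def __init__(self, cluster: str, control_plane: ControlPlane):
        self.cluster = cluster
        self.control_plane = control_plane
        self.running = []

    def sync(self) -> int:
        """Pull new workloads and start them; returns how many were new."""
        new = self.control_plane.poll(self.cluster)
        self.running.extend(new)
        return len(new)
```

In a pull model the agent initiates the connection, so the control plane does not need to hold credentials for the cluster.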

Differentiation with Other Platforms

Architecture

- Multi-Cluster from Day 1: TrueFoundry has supported multiple clusters from Day 1; clusters can live across regions and VPCs and can be private or public. This would be very difficult with the current architecture of Porter and Northflank. Komodor’s architecture can do this, but they focus more on Kubernetes visualization and monitoring, which comes by default with our platform.

- Multi-Cloud from Day 1: This will be true for Porter, Northflank, Qovery but only on the Kubernetes layer. Our architecture supports building integrations with a lot more cloud native components because of the openness of the architecture. Argonaut does this on the same broader layer as us.

- Complete transparency and Gitops: We follow a full transparency model in which users have full control over their architecture and are not blocked by TrueFoundry at any point. With Porter, Northflank, and Qovery, bigger companies will outgrow the set of functionalities because of the closed architecture. We also support both Gitops and non-Gitops modes, which no one else does yet: Porter, Northflank and Qovery are non-Gitops and push based, whereas CoSphere is fully Git based.

Application and Infrastructure Uniform deployment

We integrate very deeply into application, job and ML deployment. This translates a lot of infra knowledge into developer terminology. Many other platforms do this by building abstractions and reducing the infra concepts, whereas we try to do it by simplifying things rather than hiding them.

Argonaut adopts this approach of bringing both infra and application deployment together — but this will be a 2–3 year product cycle. They have started with more on the infra side.

Plugins

Our plugin architecture is based on the Kubernetes CRD framework with our own flavour of CueLang. This gives us the power to get plugins out on the CLI, the UI and all languages really fast. It was inspired by the design of KubeVela (an open source project); we don’t know of any other player that has figured out this pattern. This is one of our proprietary pieces, and it will also allow us to build much faster than other players.

Transparency

We try to be as transparent as possible by giving out the complete source code, which makes it really easy to migrate off of us. Only Porter and purple.sh seem to guarantee this; the rest are very closed systems.

Insights

We already show unique insights and prevent the most common bugs in software deployment, such as forgetting to change environment variables on deployment. We don’t know of any other platform that does this, or that can do it the way we can, because we know both the secret history and the deployment history. We can also generate a lot of insights on cost, stability and developer productivity, since we see the pipeline of code from source control to production.

Platform team substitute

Our platform is designed to be more like what platform teams build internally. It addresses the key issues of resource allocation, security and abstractions for developers. Porter and Northflank are more similar to Heroku. Qovery and Argonaut are more like a substitute for devops at smaller companies. Humanitec and Shipa are designed more for platform teams, but we found their abstractions quite hard to reason about and understand.