Are AI Workloads Bloating Your Cloud Bill?

We firmly believe that every company will be a Machine Learning (ML) company in the coming few years. As organizations embrace ML, one of the significant challenges they face is managing the associated cloud costs. Running AI/ML workloads in the cloud can quickly become expensive, but with careful planning and optimization, it is possible to reduce these costs significantly.

In this blog post, we will explore several strategies to help you optimize your AI infrastructure, ultimately reducing your cloud expenses without compromising performance or scalability. Below are the broad categories to consider:

- Measure and Attribute

- Reduce compute costs by using spot / reserved instances

- Choose the right deployment architecture

- Implement autoscaling

- Compute and data colocation

- Move to hosted auto-stopping notebooks instead of providing a dedicated VM for every developer

- Use checkpointing where possible so long-running training jobs can resume after an interruption

- Efficient GPU utilization

Measure and Attribute

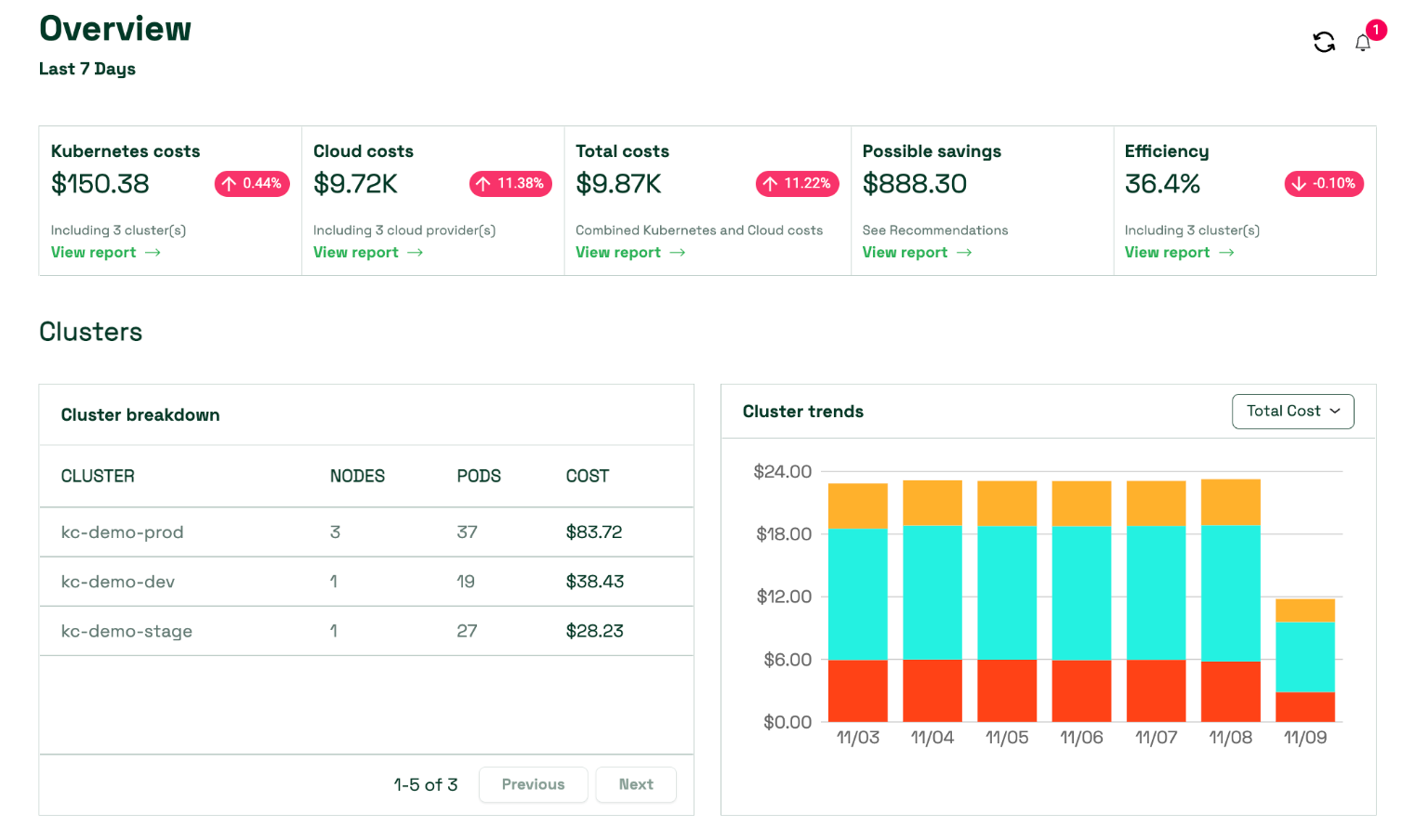

From our work with quite a few organizations and our own previous experience, a large part of the cost comes from simple human mistakes: forgetting to turn off VMs or services, or choosing an architecture that costs more than it should. Full visibility into who owns what, and what each team or project costs, helps surface cost leaks faster and keeps everyone accountable for their own projects.

You can't improve what you don't measure

The very first step in optimising ML workloads is to measure costs and attribute them to their owners. Below are some of the initiatives that you can undertake:

- Track costs at the microservice, project, or team level.

- Provide cost visibility to all developers and ensure they are empowered to understand and reduce costs.

- Set up alerts on cloud costs.

- If you are using Terraform, use tools like Infracost.io to estimate the cost of the infra before provisioning it.
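As a rough illustration of the attribution and alerting steps above, here is a minimal sketch that groups billing line items by an ownership tag and flags teams that exceed a budget. The record layout, tag names, and budgets are all hypothetical; a real cloud cost export will have a different schema.

```python
from collections import defaultdict

# Hypothetical billing line items; real cost exports carry many more fields.
LINE_ITEMS = [
    {"resource": "vm-train-01", "cost": 412.50, "tags": {"team": "search", "project": "ranking"}},
    {"resource": "vm-notebook-07", "cost": 96.20, "tags": {"team": "search"}},
    {"resource": "gpu-node-03", "cost": 780.00, "tags": {"team": "vision", "project": "ocr"}},
    {"resource": "disk-old-12", "cost": 35.10, "tags": {}},
]


def cost_by_tag(items, tag_key):
    """Sum cost per value of `tag_key`; resources missing the tag go to 'untagged'."""
    totals = defaultdict(float)
    for item in items:
        owner = item["tags"].get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)


def over_budget(totals, budgets):
    """Return owners whose spend exceeds their budget (owners without a budget are skipped)."""
    return sorted(o for o, spent in totals.items() if o in budgets and spent > budgets[o])


team_totals = cost_by_tag(LINE_ITEMS, "team")
# Hypothetical monthly budgets in USD.
alerts = over_budget(team_totals, {"search": 500.0, "vision": 1000.0})
print(team_totals)
print("Over budget:", alerts)
```

The "untagged" bucket is often the most useful line in such a report, since it shows spend that nobody currently owns.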

Reduce Compute Cost

ML workloads incur huge compute costs, mainly because they need either a lot of CPU or GPUs, both of which turn out to be very expensive. Below are some of the steps you can take to reduce the compute cost:

- Use model distillation and pruning to reduce your model's resource requirements

- Use Reserved and Spot instances to reduce the cost. EC2 Spot Instances, according to Amazon, can potentially save you up to 90% of what you’d otherwise spend on On-Demand Instances.

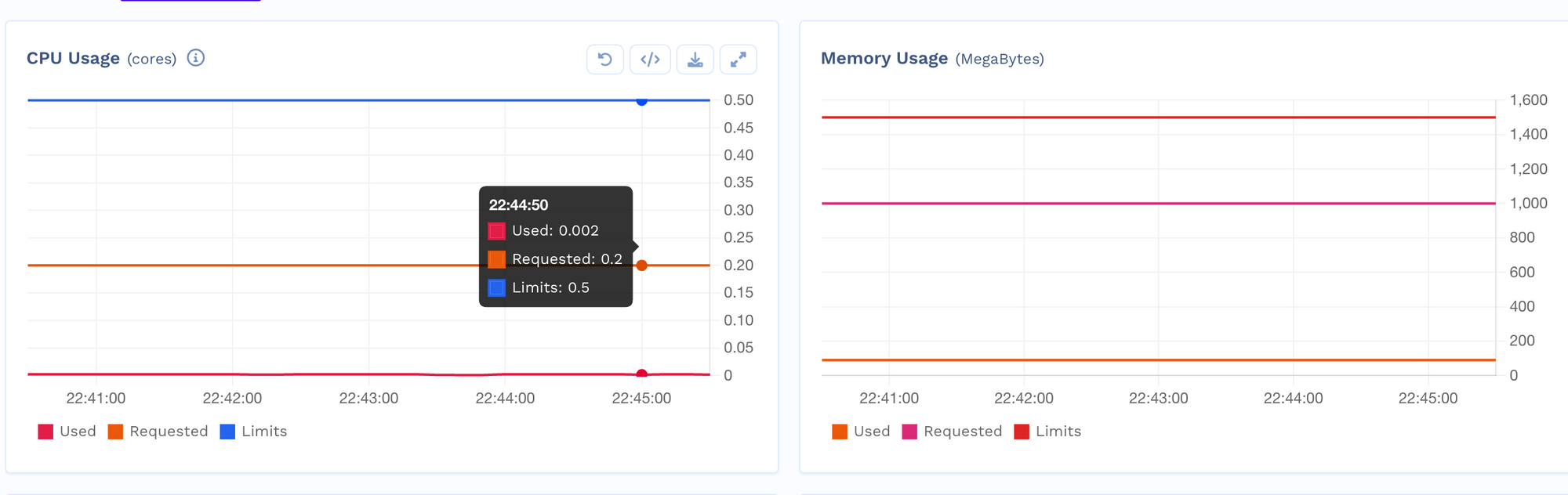

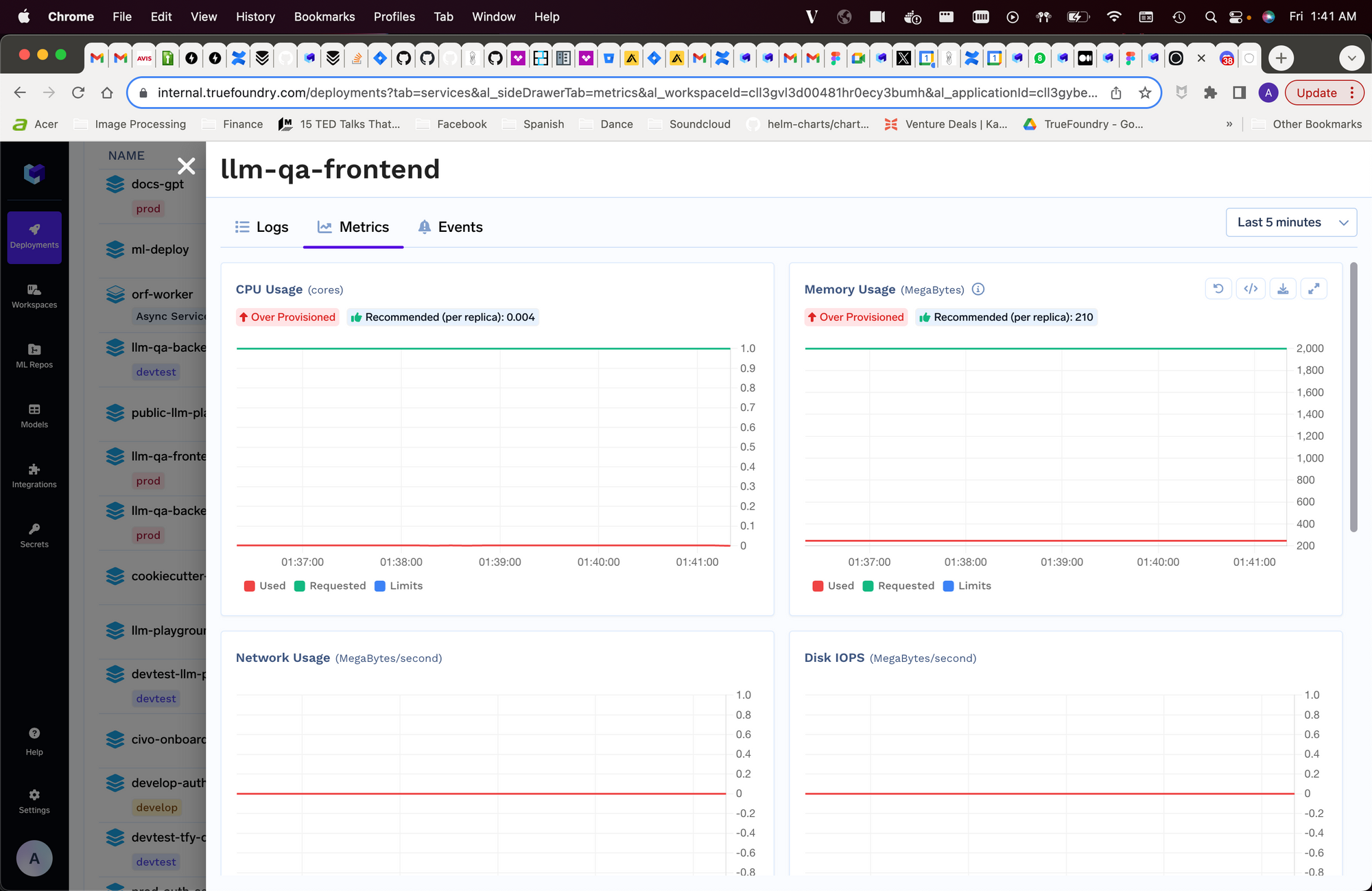

- Choose the right resource configuration: We have often found that ML teams overprovision resources for ML services and training jobs. This can mean either choosing the wrong instance type if you are working with VMs, or setting the wrong CPU and memory requests. It's important to get visibility into requested versus used CPU and memory so that you can tune the values for optimal cost.
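To make the requested-versus-used comparison above concrete, here is a minimal sketch that suggests a CPU request from observed usage. The rule used (95th percentile plus 20% headroom) and the sample values are assumptions for illustration, not a recommendation from any particular tool.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list (0 < pct <= 100)."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def suggest_request(usage_samples, pct=95, headroom=0.2):
    """Suggest a resource request: the chosen usage percentile plus some headroom."""
    return percentile(usage_samples, pct) * (1 + headroom)


# Hypothetical CPU usage (in cores) sampled over time for a service that requests 4 cores.
cpu_usage = [0.4, 0.5, 0.6, 0.5, 0.7, 0.9, 0.6, 0.5, 1.1, 0.6]
requested_cpu = 4.0

suggested_cpu = suggest_request(cpu_usage)
overprovision_ratio = requested_cpu / suggested_cpu
print(f"suggested request: {suggested_cpu:.2f} cores, "
      f"currently requesting {overprovision_ratio:.1f}x that")
```

The same calculation applies to memory, though memory usually deserves more headroom because exceeding a memory limit kills the process rather than throttling it.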

When you launch a Spot Instance, you can specify a maximum price that you are willing to pay per hour. If the Spot price for the instance type and Availability Zone that you request is less than your maximum price and capacity is available, your instance will be launched. However, if the Spot price for that instance type and Availability Zone rises above your maximum price, or AWS needs the capacity back, your instance may be terminated with two minutes' notice.

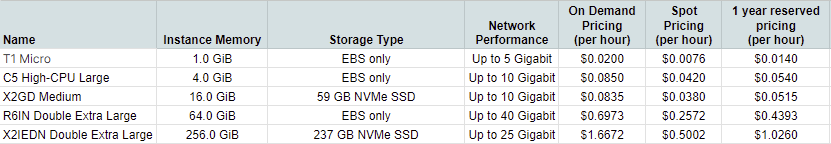

We did a comparative study in US East (N. Virginia) and found that:

- Spot instances were 44%-62% cheaper than on-demand instances.

- 1-year reserved instances were 14%-37% cheaper than on-demand instances.

Choose the optimal deployment architecture

Different ML use cases require different architectures, and choosing the wrong design can lead to massive differences in cost. Some of the most common use cases and mistakes we have seen are:

- Real-time Inference: In this case, the model inference latency is under 1-2 seconds (often on the order of milliseconds) and the traffic volume is high. The model should be deployed as a real-time autoscaling API, and in many ML use cases we have found requests/second to be a better autoscaling metric than CPU or memory. If the traffic is bursty (for example, concentrated in 2-3 hours a day) and the model is small, a serverless deployment (such as AWS Lambda) turns out to be more cost-effective.

- Async Inference: In this case, the processing logic can take a few seconds, and a queue needs to be provisioned for better reliability. Without one, requests fail and business outcomes are lost. For autoscaling, queue length is a good metric in these use cases.

- Multiple small models with bursty traffic: This is a common use case in companies where models are customer-specific. There are a large number of models, each of them receives a low amount of traffic, and the latency expectations are quite low. Here, one container hosts multiple models, which are loaded into and unloaded from memory dynamically based on need. The challenge is tracking which models are already loaded in which pods so that requests can be routed accordingly.

- Resource-intensive infrequent processing/cron jobs: This can be the use case if models are trained dynamically based on user actions. Async inference might not work here since a processing job can take on the order of minutes. Instead, the processing should be submitted as jobs to a workflow orchestrator, and real-time status updates for each job should be published to a notification queue.
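For the multi-model case in the list above, the dynamic load/unload behaviour inside a single container can be sketched as a small least-recently-used cache. This is only an illustration: `ModelCache` and `fake_loader` are hypothetical names, and a real server would also need to account for model size, concurrency, and routing between pods.

```python
from collections import OrderedDict


class ModelCache:
    """Keep at most `capacity` models in memory, evicting the least recently used."""

    def __init__(self, capacity, loader):
        self.capacity = capacity
        self.loader = loader          # hypothetical callable: model_id -> loaded model
        self._models = OrderedDict()  # model_id -> model, least recently used first

    def get(self, model_id):
        if model_id in self._models:
            self._models.move_to_end(model_id)   # cache hit: mark as most recently used
            return self._models[model_id]
        model = self.loader(model_id)            # cache miss: load on demand
        self._models[model_id] = model
        if len(self._models) > self.capacity:
            self._models.popitem(last=False)     # unload the least recently used model
        return model

    def loaded(self):
        """Model ids currently in memory, least recently used first."""
        return list(self._models)


loads = []  # records every (expensive) load so we can see the cache working


def fake_loader(model_id):
    loads.append(model_id)
    return f"model-for-{model_id}"


cache = ModelCache(capacity=2, loader=fake_loader)
for customer in ["a", "b", "a", "c", "b"]:
    cache.get(customer)
print("loads:", loads, "in memory:", cache.loaded())
```

The list returned by `loaded()` is the kind of information a router would need in order to send each request to a pod that already holds the right model.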

Oftentimes, forcing one of these use cases into the wrong architecture leads to a loss of reliability, additional latency, or large cloud bills.
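The autoscaling metrics mentioned above (requests/second for real-time APIs, queue length for async workers) both reduce to the same simple proportional rule. The sketch below is a simplification under assumed targets and limits, not the exact algorithm of any specific autoscaler.

```python
import math


def desired_replicas(metric_value, target_per_replica, min_replicas=0, max_replicas=20):
    """Replicas needed so each one handles roughly `target_per_replica` of the metric."""
    needed = math.ceil(metric_value / target_per_replica)
    return max(min_replicas, min(max_replicas, needed))


# Real-time API: 450 requests/second, assuming each replica comfortably serves 60 rps.
api_replicas = desired_replicas(450, 60, min_replicas=1)

# Async workers: 35 queued messages, aiming for at most 10 pending per worker.
worker_replicas = desired_replicas(35, 10)

# Empty queue: async workers can scale all the way down to zero.
idle_replicas = desired_replicas(0, 10)

print(api_replicas, worker_replicas, idle_replicas)
```

Keeping a minimum of one replica for the real-time API avoids cold starts, while the queue-backed workers can afford to scale to zero because the queue buffers requests until a worker is back.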

Implement Autoscaling

People often assume autoscaling is useful only when there is a high volume of traffic and machines need to be scaled up or down based on it. However, we also want to extend the concept of autoscaling to development environments to save cost. Some areas where autoscaling can help save cost drastically are:

- Scale your compute requirement automatically based on demand: Leverage the auto-scaling capabilities to dynamically adjust your infrastructure's size based on workload demands. By automatically scaling up or down, you can optimize resource utilization and reduce costs during periods of low activity. Identify appropriate scaling thresholds and triggers based on your workload patterns to ensure optimal resource allocation.

- Scale down infra to zero when not in use (e.g. dev instances at night): Some of the infra in an org is not needed when there are no users. Implementing a system of scaling the compute infrastructure to zero when unneeded can generate a significant amount of savings for an organization.

- Apply for instance reservation with your cloud provider: Applying for reserved instances with your cloud provider can get you good discounts where you have a more predictable compute requirement.

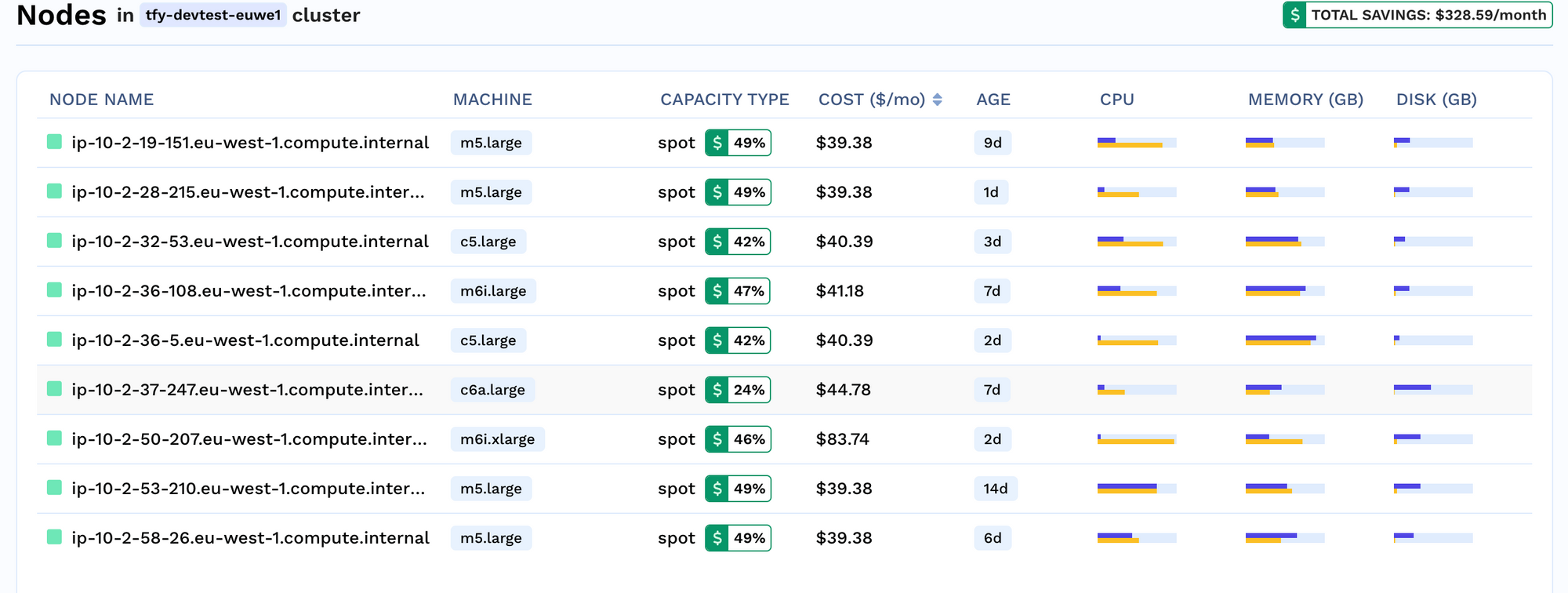

- Enforce spot instance usage for stateless workloads: Take advantage of spot instances (AWS) or preemptible VMs (Google Cloud) for fault-tolerant ML workloads. These instances are significantly cheaper than on-demand instances, allowing you to save costs by running on unused capacity. However, be mindful of the potential for instance termination, and design your infrastructure to handle interruptions gracefully. To show the difference between on-demand and spot prices, let's compare the monthly price of a T4 GPU instance on AWS and Azure.

g4dn.xlarge (AWS): $383 (On-demand) vs $115 (Spot)

NC4as T4 v3 (Azure): $383 (On-demand) vs $49 (Spot)
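Expressed as percentages, the two price pairs above work out as follows. The helper name `savings_percent` is ours; the prices are the monthly figures quoted above.

```python
def savings_percent(on_demand, spot):
    """Percentage saved by paying `spot` instead of `on_demand`, rounded to one decimal."""
    return round((on_demand - spot) / on_demand * 100, 1)


# Monthly prices in USD, as quoted above: (on-demand, spot).
prices = {
    "g4dn.xlarge": (383, 115),
    "NC4as T4 v3": (383, 49),
}

savings = {name: savings_percent(od, spot) for name, (od, spot) in prices.items()}
for name, pct in savings.items():
    print(f"{name}: {pct}% cheaper on spot")
```

That is roughly 70% and 87% off the on-demand price for these two instance types.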

Compute and data colocation

It's important to colocate data and compute so that we don't incur a lot of ingress/egress costs. Training usually involves downloading the data to the machines where the model is being trained. A few things to take care of here to avoid unexpected costs are:

- Share data across multiple data scientists: It's important to keep a single copy of the input training data that different data scientists can use, instead of making one copy for every data scientist. This can be achieved by mounting a read-only volume with the data on all the training machines.

- Delete unused volumes: We often forget to delete volumes we have created, which leaves dangling volumes lying around and incurring costs.

Auto-shutdown notebooks based on inactivity

Oftentimes, data scientists start a VM and either set up Jupyter Notebook there or use it via SSH in VSCode. While this approach works, developers often forget to shut down the VMs when they are done working, which drains a lot of money. It is worth investing in hosted notebooks with auto-shutdown once the DS team grows to more than 5 members.
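The core of an inactivity-based shutdown is a simple timestamp comparison. Below is a minimal sketch; the 30-minute timeout, the notebook names, and the idea that a "last activity" timestamp is available per notebook are all assumptions for illustration.

```python
from datetime import datetime, timedelta


def should_shut_down(last_activity, now, idle_timeout=timedelta(minutes=30)):
    """True when the notebook has been idle for at least `idle_timeout`."""
    return now - last_activity >= idle_timeout


now = datetime(2024, 1, 15, 18, 0)

# Hypothetical notebooks mapped to the time of their last kernel or user activity.
notebooks = {
    "alice-gpu": datetime(2024, 1, 15, 17, 50),  # active 10 minutes ago
    "bob-cpu": datetime(2024, 1, 15, 14, 5),     # idle for almost 4 hours
}

to_stop = [name for name, last in notebooks.items() if should_shut_down(last, now)]
print("Notebooks to stop:", to_stop)
```

In practice the hard part is defining "activity" well, so that a long-running cell does not get killed while a forgotten browser tab does not keep a GPU alive.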

Efficient GPU Utilization

GPUs are heavily used in ML, yet in very few cases are they used efficiently. This article sheds excellent light on how GPUs are mostly used today and the inefficiencies involved. Sharing GPUs between workloads and using efficient batching techniques are essential to utilizing GPUs well.

How can TrueFoundry help in reducing costs?

TrueFoundry has helped all its customers save a minimum of around 40% on infrastructure costs.

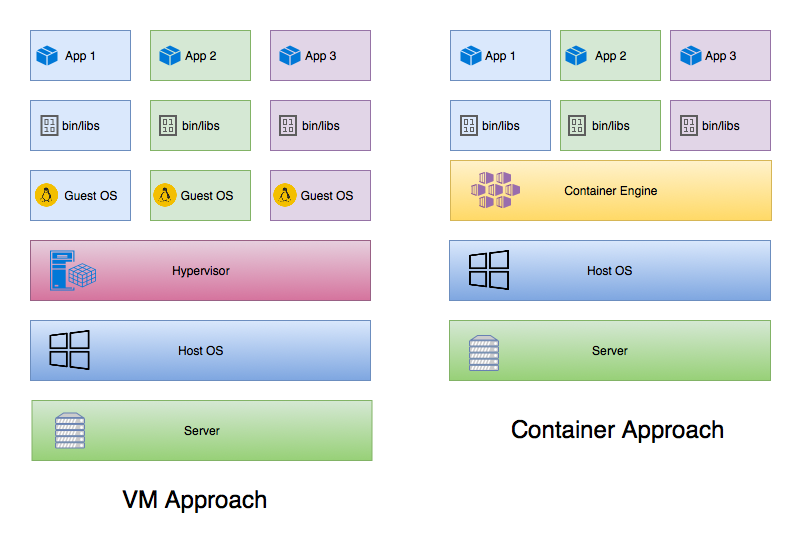

We use Kubernetes

Kubernetes helps reduce cost by efficiently bin-packing workloads onto nodes and making sure the cluster is being used effectively. This is a great article that sheds more light on how Kubernetes helps save costs.

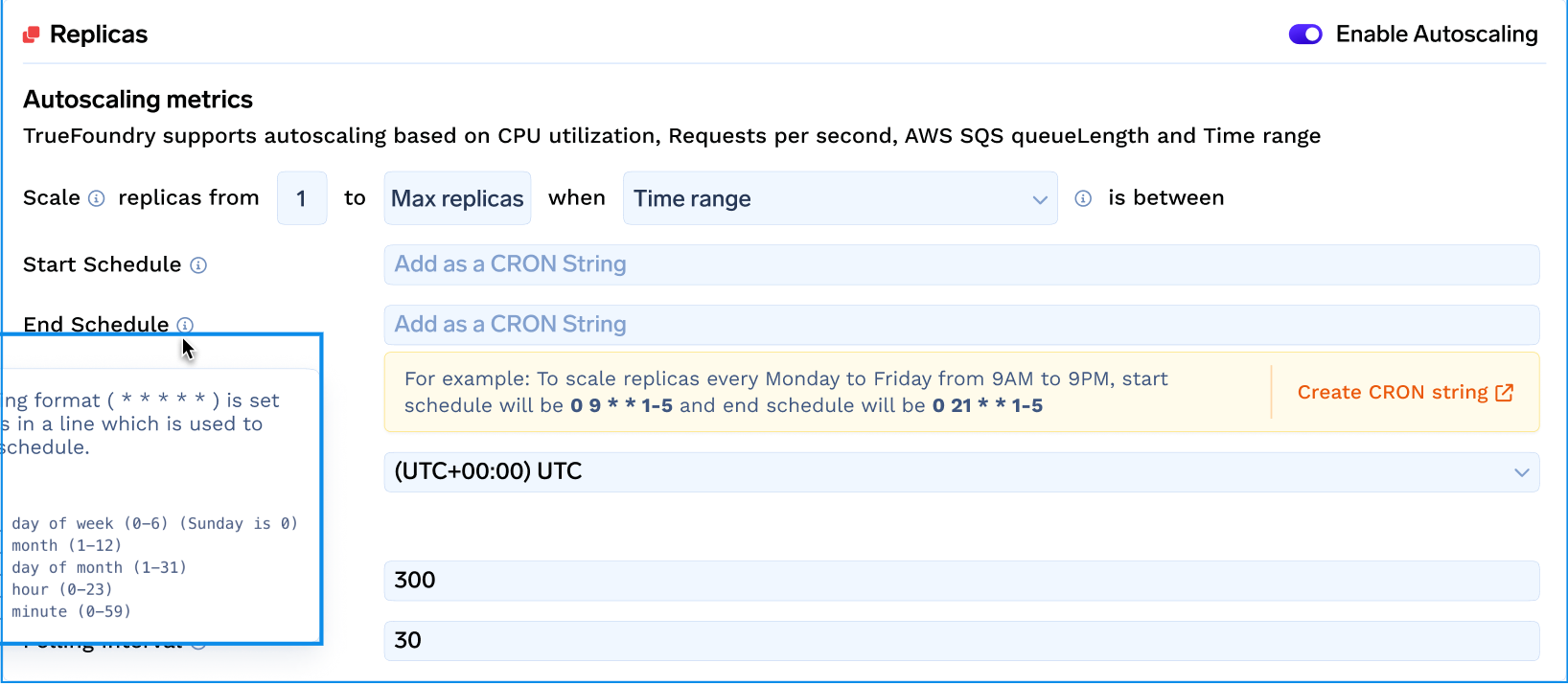

Save Dev Costs by 60% using Time Based Autoscaling

TrueFoundry makes it really easy to shut down your dev instances using the time-based autoscaling feature. Developers mostly work for around 40 hours a week, whereas machines that are never shut down run for all 168 hours, so they sit idle for almost 128 hours a week. If we were to shut down the machines outside working hours, we could save around 60% of the cost.
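To see where a number of this size comes from, here is a minimal sketch of a time-based schedule and the resulting saving. It is not TrueFoundry's implementation: the weekday 08:00 to 20:00 window is an assumed schedule with some buffer around a normal working day, and the saving is measured against a machine that runs all 168 hours of the week.

```python
from datetime import datetime

WORK_DAYS = {0, 1, 2, 3, 4}    # Monday=0 .. Friday=4
START_HOUR, END_HOUR = 8, 20   # assumed uptime window: 08:00 to 20:00


def should_be_running(ts):
    """True if a dev environment should be up at timestamp `ts`."""
    return ts.weekday() in WORK_DAYS and START_HOUR <= ts.hour < END_HOUR


def weekly_uptime_hours():
    """Hours per week the environment stays up under this schedule."""
    return len(WORK_DAYS) * (END_HOUR - START_HOUR)


def savings_fraction(hours_up, hours_in_week=7 * 24):
    """Fraction of cost saved compared with running around the clock."""
    return 1 - hours_up / hours_in_week


tuesday_morning = should_be_running(datetime(2024, 1, 16, 10, 30))
saturday_noon = should_be_running(datetime(2024, 1, 20, 12, 0))
saved = savings_fraction(weekly_uptime_hours())
print(tuesday_morning, saturday_noon, f"{saved:.0%} saved")
```

Tightening the window towards the 40 hours developers actually work pushes the saving higher, at the price of occasionally making someone wait for an environment to start.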

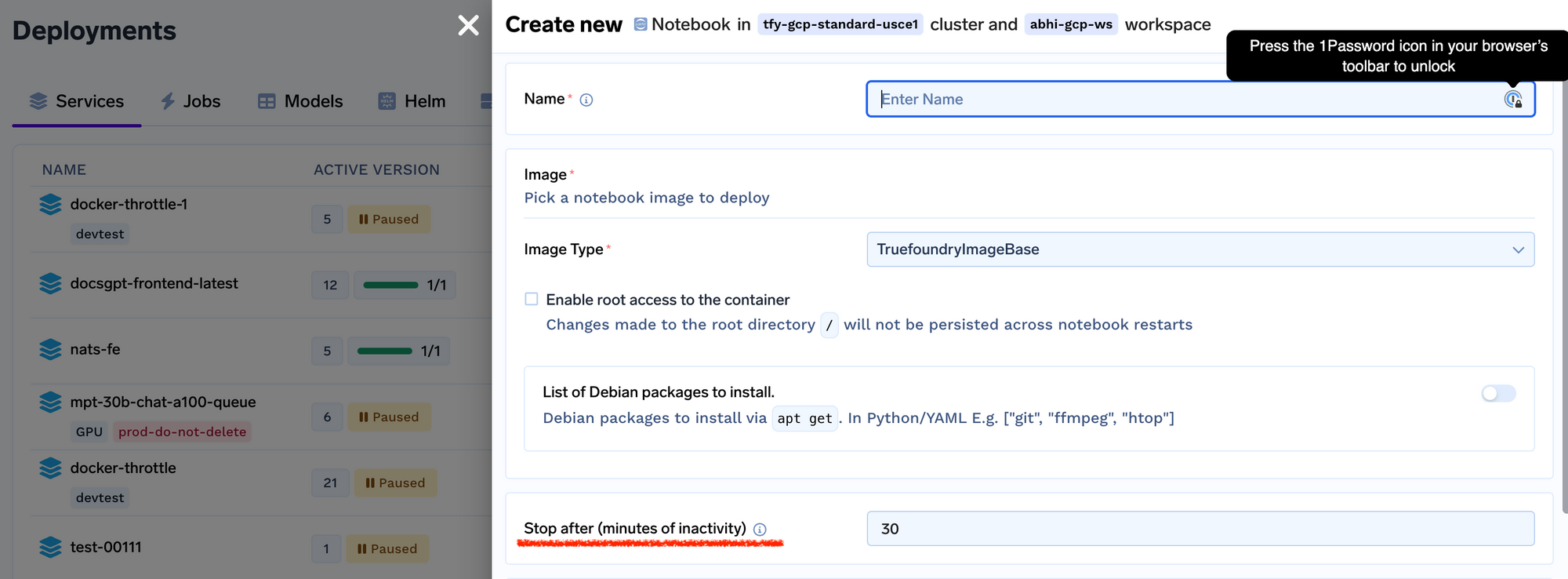

Auto-shutdown notebooks based on inactivity

TrueFoundry allows data scientists to configure an inactivity timeout on every notebook, after which the notebook is automatically switched off.

This helps save a lot of cost, especially if notebooks are running on GPUs.

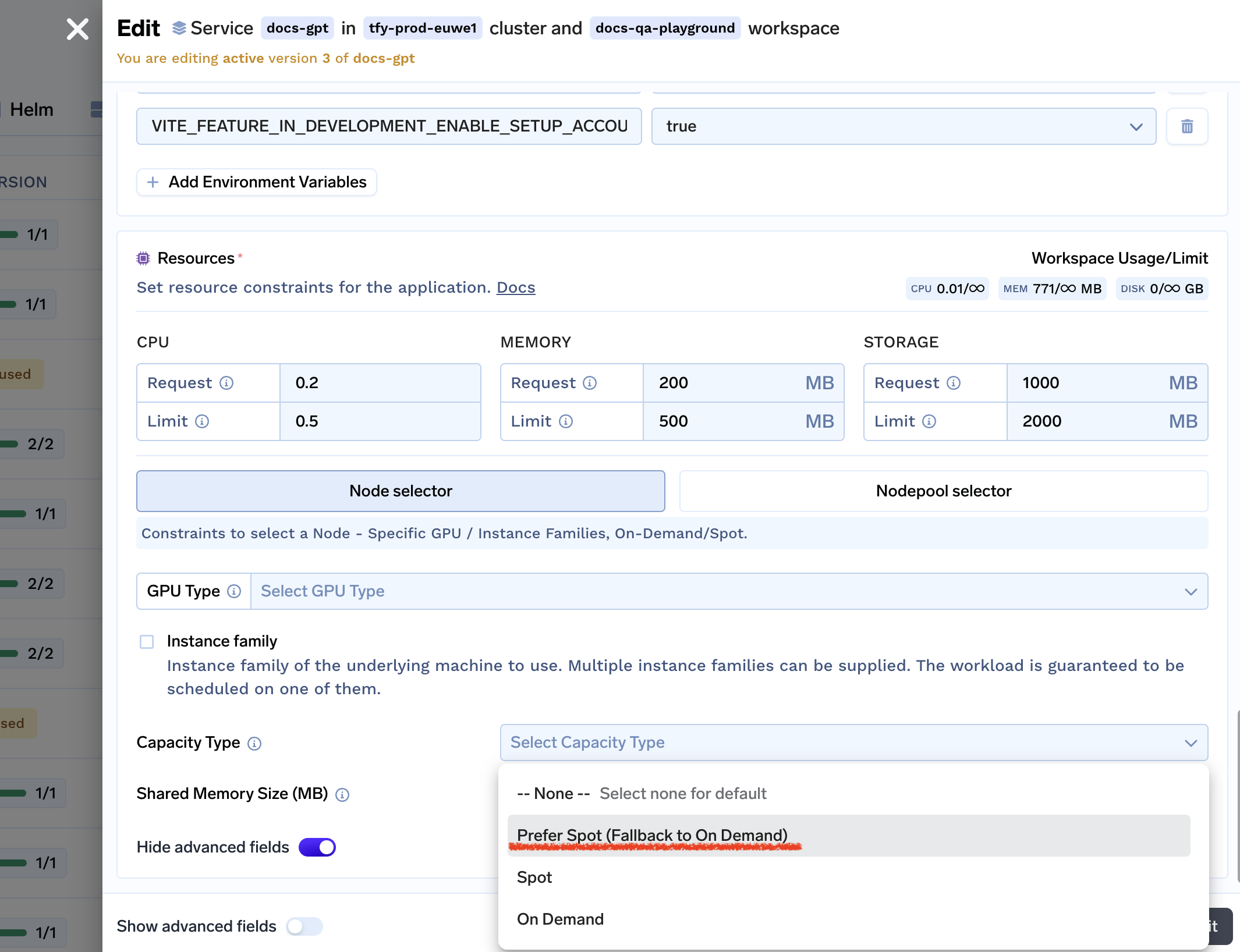

Easy to use spot instances

TrueFoundry makes it really easy for developers to choose between spot and on-demand instances. Developers and data scientists know their applications best, so we leave it to them to decide what is right for their applications.

It also shows you the cost tradeoffs between spot and on-demand instances so that you can make the right choice for your use case.

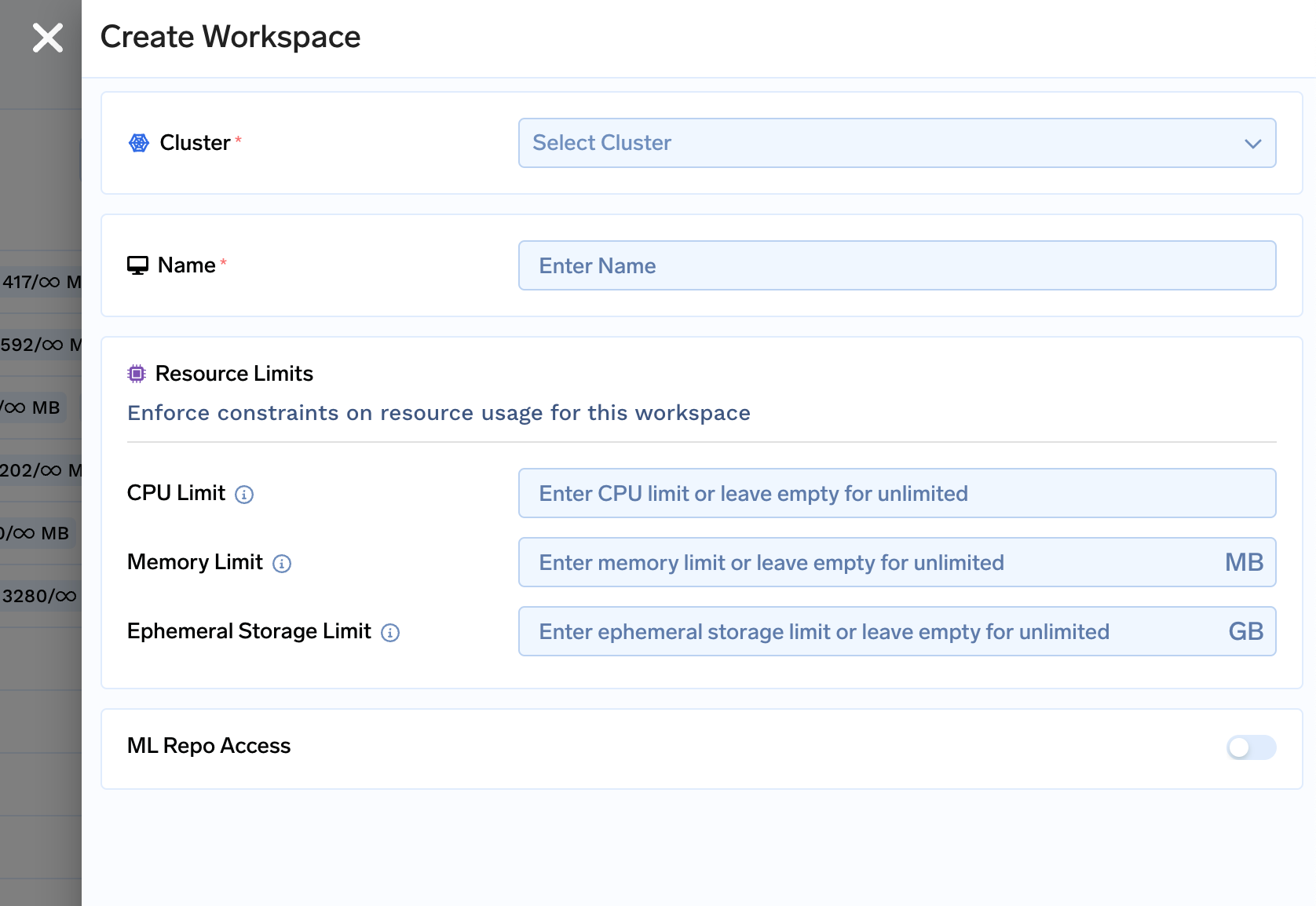

Resource quotas for teams / developers

TrueFoundry allows you to set CPU, memory, and GPU quotas for different teams and developers. This gives leaders a sense of the cost allocation across teams and also guards against developer mistakes by not allowing anyone to go beyond their allotted limits.
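Conceptually, a quota check compares what a team already uses plus what it is asking for against its limit. The sketch below illustrates that idea only; the resource names, numbers, and the `quota_violations` helper are hypothetical and do not represent TrueFoundry's API.

```python
def quota_violations(used, requested, quota):
    """Return the resources for which used + requested would exceed the team's quota."""
    return sorted(
        resource
        for resource, amount in requested.items()
        if used.get(resource, 0) + amount > quota.get(resource, 0)
    )


# Hypothetical quota and current usage for one team.
team_quota = {"cpu": 32, "memory_gb": 128, "gpu": 2}
team_usage = {"cpu": 20, "memory_gb": 64, "gpu": 2}

# A new deployment asks for one more GPU than the team has left.
new_request = {"cpu": 8, "memory_gb": 32, "gpu": 1}

violations = quota_violations(team_usage, new_request, team_quota)
print("Rejected for:", violations if violations else "nothing, request fits")
```

Rejecting the request at submission time is what turns a quota from a reporting number into a guardrail.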

Insights related to Cost Optimization

TrueFoundry automatically shows you the recommended CPU and memory resources for your service by analyzing its consumption over the last few days. It currently recommends CPU and memory requests and limits; in the future, we also plan to automatically recommend the autoscaling strategy and the correct architecture.

Take the Assessment

If you want to assess how you can optimise your AI workload costs, we have created an easy-to-take 5-minute assessment.

We promise to share a personalised report with you.