Scaling Up Serving of Fine-Tuned LoRA Models

Scaling the serving of fine-tuned models has become a central challenge in deploying AI applications. Consider a scenario: you're a SaaS startup with 500 customers, each needing one fine-tuned large language model (LLM) for internal support and another for personalized marketing content. That means fine-tuning, and then serving, around 1,000 models.

This is where LoRA fine-tuning comes in: a technique that freezes the base LLM and trains only a small set of additional low-rank weights to fit the new data. The approach is efficient and scalable because it updates only a fraction of the original parameter count, as set by the LoRA configuration.
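To make the "fraction of the original parameters" concrete, here is a minimal sketch of the parameter arithmetic. It assumes the common LoRA formulation, in which a frozen weight matrix is paired with two small trainable matrices of rank `r`. The layer size and rank below are illustrative values, not numbers from our experiments.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in weight matrix.

    The original matrix stays frozen; LoRA learns two small matrices,
    A (rank x d_in) and B (d_out x rank), whose product is added to it.
    """
    return rank * d_in + d_out * rank


def trainable_fraction(d_in: int, d_out: int, rank: int) -> float:
    """Trainable LoRA parameters relative to the frozen matrix size."""
    frozen = d_in * d_out
    return lora_trainable_params(d_in, d_out, rank) / frozen


# Illustrative (assumed) values: a 2048 x 2048 projection with rank 8.
d_in, d_out, rank = 2048, 2048, 8
print(lora_trainable_params(d_in, d_out, rank))  # 32768
print(trainable_fraction(d_in, d_out, rank))     # 0.0078125
```

Under these assumed values, the adapter for this layer is under 1% of the size of the frozen matrix, which is why many adapters can share one base model.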

The real challenge, however, lies in serving these fine-tuned adapters effectively.

Experiment Setup

Many open-source LLM serving frameworks are working on support for serving LoRA adapters, but a comprehensive solution remains elusive. In this experiment, we explore LoRAX by Predibase, a promising framework built specifically for serving and scaling LoRA adapters.

Key Features of LoRAX

LoRAX stands out with three fundamental pillars of implementation:

- Dynamic Adapter Loading: Allows just-in-time loading of fine-tuned LoRA weights, ensuring no disruption to concurrent requests at runtime.

- Tiered Weight Caching: Facilitates swift swapping of LoRA adapters between requests, preventing out-of-memory issues by offloading adapter weights to CPU and disk.

- Continuous Multi-Adapter Batching: Employs a fair scheduling policy to optimize overall system throughput, extending continuous batching strategies across multiple sets of LoRA adapters in parallel.
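The tiered caching idea can be sketched with a toy two-level LRU cache. This is a hypothetical illustration of the concept, not LoRAX's actual implementation: the class name, slot counts, and loader function are all assumptions, and the "weights" are plain strings rather than tensors.

```python
from collections import OrderedDict


class TieredAdapterCache:
    """Toy two-tier LRU cache: a small 'GPU' tier backed by a larger 'CPU' tier.

    Hypothetical illustration only; it stores opaque weight objects and
    does not move real tensors between devices.
    """

    def __init__(self, gpu_slots: int, cpu_slots: int):
        self.gpu_slots = gpu_slots
        self.cpu_slots = cpu_slots
        self.gpu = OrderedDict()  # adapter_id -> weights, most recent last
        self.cpu = OrderedDict()

    def get(self, adapter_id, load_from_disk):
        """Return weights for adapter_id, promoting them to the GPU tier."""
        if adapter_id in self.gpu:
            self.gpu.move_to_end(adapter_id)  # mark as recently used
            return self.gpu[adapter_id]
        if adapter_id in self.cpu:
            weights = self.cpu.pop(adapter_id)  # promote from the CPU tier
        else:
            weights = load_from_disk(adapter_id)  # slowest path
        self._put_gpu(adapter_id, weights)
        return weights

    def _put_gpu(self, adapter_id, weights):
        self.gpu[adapter_id] = weights
        if len(self.gpu) > self.gpu_slots:
            evicted_id, evicted = self.gpu.popitem(last=False)  # evict LRU
            self.cpu[evicted_id] = evicted  # offload instead of dropping
            if len(self.cpu) > self.cpu_slots:
                self.cpu.popitem(last=False)  # now available from disk only


disk_reads = []


def fake_disk_loader(adapter_id):
    """Stand-in for reading adapter weights from disk."""
    disk_reads.append(adapter_id)
    return f"weights-for-{adapter_id}"


cache = TieredAdapterCache(gpu_slots=2, cpu_slots=2)
for adapter in ["a", "b", "c", "a"]:
    cache.get(adapter, fake_disk_loader)

print(list(cache.gpu))  # ['c', 'a']
print(list(cache.cpu))  # ['b']
print(disk_reads)       # ['a', 'b', 'c']
```

Note that the second request for adapter `a` is served from the CPU tier rather than from disk, which is the behavior the tiering is meant to provide.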

Performance Evaluation with LoRAX

In our experiments, we evaluated how well LoRAX manages a large set of fine-tuned adapters on top of the TinyLlama base model, measuring how efficiently it processes many requests spread across multiple adapters.

Prerequisites for LoRAX Setup

To replicate our experiments, start the LoRAX server on your machine with Docker:

    docker run \
        --gpus all \
        --shm-size 1g \
        -p 8080:80 \
        -v $PWD/data:/data \
        ghcr.io/predibase/lorax:latest \
        --model-id TinyLlama/TinyLlama-1.1B-Chat-v0.6 # base model used for fine-tuning

Additionally, install the LoRAX client for inference calls by using:

    pip install lorax-client

Inference Insights

Serving LoRA fine-tuned models with LoRAX has a clear advantage: a single GPU holds the base LLM, and each adapter is loaded dynamically per request. This makes LoRAX well suited to serving a large number of fine-tuned LoRA models.

Inference Code Example:

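    from lorax import Client

    # Endpoint of the running LoRAX server
    client = Client("https://example.lorax.truefoundry.tech", timeout=10)

    # Illustrative inputs; replace with your own prompt and adapter path
    prompt = "Summarize the customer's last support ticket."
    adapter_id = "/data/adapters/customer-001"  # hypothetical path on the server

    # Greedy decoding of 10 new tokens with the chosen adapter
    generated_text = client.generate(
        prompt,
        do_sample=False,
        max_new_tokens=10,
        adapter_id=adapter_id,
        adapter_source="local",
    ).generated_text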
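If you would rather not install the client, the same request can be sketched against the server's REST interface using only the standard library. This assumes the server exposes a `/generate` route that accepts an `inputs` string and a `parameters` object, and that the response JSON contains a `generated_text` field; check this against the LoRAX documentation for your version. The host, port, and adapter path are hypothetical.

```python
import json
import urllib.request


def build_generate_request(base_url, prompt, adapter_id, max_new_tokens=10):
    """Build (but do not send) a POST request for the /generate route."""
    payload = {
        "inputs": prompt,
        "parameters": {
            "do_sample": False,
            "max_new_tokens": max_new_tokens,
            "adapter_id": adapter_id,
            "adapter_source": "local",
        },
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical values: a server published on local port 8080 and an
# adapter directory available to it under /data.
request = build_generate_request(
    "http://127.0.0.1:8080", "Hello", "/data/adapters/customer-001"
)
print(request.full_url)
print(json.loads(request.data)["parameters"]["adapter_id"])

# To actually send it (requires a running server):
# with urllib.request.urlopen(request, timeout=10) as response:
#     print(json.loads(response.read())["generated_text"])
```

Building the request separately from sending it keeps the payload easy to inspect and lets you reuse the same function across many adapter IDs.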

In our experiments, LoRAX showed substantial benefits for serving fine-tuned LoRA models. By loading adapters dynamically per request and keeping the base LLM on a single A100 GPU, it kept latency low as the number of adapters grew.

Performance Insights

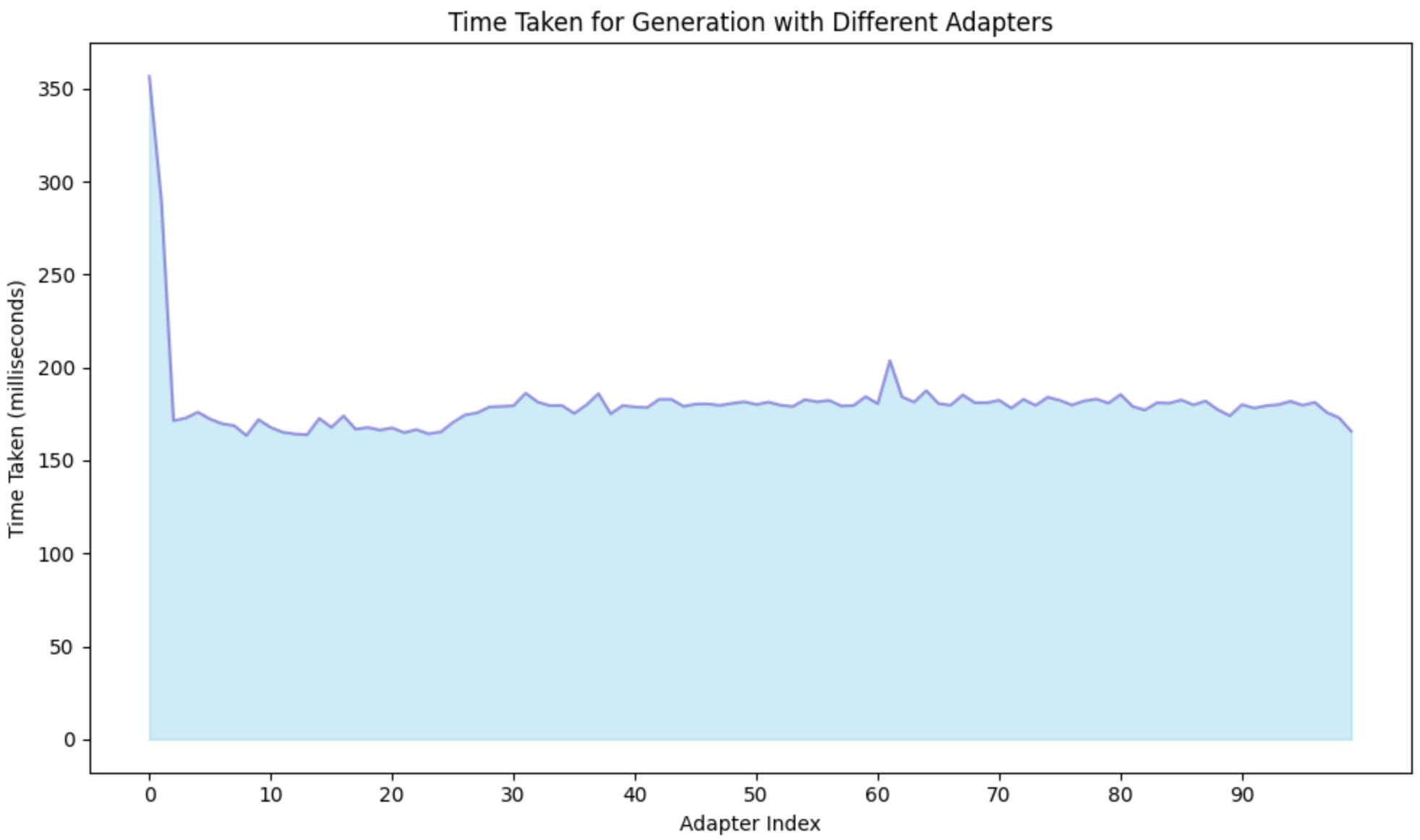

In our experiments with dynamically loading 100 adapters, the first request showed higher latency, mainly because the base LLM had to be loaded into memory. Subsequent requests were faster because LoRAX can swap adapters quickly and merge them at request time for predictions.
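A measurement like this can be sketched as a small timing harness that separates the first (cold) request from the later (warm) ones. This is not the script we used; `fake_generate` is a stand-in that simulates a slow first call, and you would replace it with a real client call to reproduce the experiment.

```python
import statistics
import time


def measure_latencies(generate_fn, prompt, adapter_ids):
    """Call generate_fn once per adapter and return latencies in ms."""
    latencies_ms = []
    for adapter_id in adapter_ids:
        start = time.perf_counter()
        generate_fn(prompt, adapter_id)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    return latencies_ms


def summarize(latencies_ms):
    """Separate the first (cold) request from the remaining (warm) ones."""
    cold, warm = latencies_ms[0], latencies_ms[1:]
    return {
        "cold_ms": cold,
        "warm_mean_ms": statistics.mean(warm) if warm else None,
        "warm_max_ms": max(warm) if warm else None,
    }


def fake_generate(prompt, adapter_id):
    """Stand-in for a real client call; sleeps longer on the first adapter."""
    time.sleep(0.05 if adapter_id == "adapter-0" else 0.005)
    return "ok"


adapter_ids = [f"adapter-{i}" for i in range(5)]
latencies = measure_latencies(fake_generate, "Hello", adapter_ids)
summary = summarize(latencies)
print(summary)
```

Reporting the cold request separately avoids skewing the average with one-time model loading cost.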

In earlier blog posts, we discussed loading LoRA fine-tuned models with HuggingFace PEFT. Our experiments, however, highlighted two significant issues with that approach:

1) Latency Escalation with More Adapters in GPU Memory:

The first problem appeared as we tried to fit more adapters into GPU memory: latency rose noticeably with each additional adapter, degrading overall performance.

2) Limited Capacity in GPU Memory:

The second limitation was that only a finite number of adapters fit in GPU memory at once, which caps how far this approach can scale.

To further illustrate the performance differences, consider the following comparisons:

Processing 70 tokens and generating 10 tokens on an A100 GPU:

- HuggingFace PEFT: latency ranged from 400 to 450 ms.

- LoRAX: average latency of 170 ms.

Impact of the number of adapters on latency:

- HuggingFace PEFT: adding 1,000 TinyLlama LoRA adapters to the base LLM pushed latency to 3,500-4,000 ms, roughly a 700% to 900% increase over the 400-450 ms baseline.

- LoRAX: latency was unaffected by the number of adapters, thanks to dynamic loading onto the GPU, so it stayed stable even with a larger number of models.
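The percentage figure follows directly from the latency ranges reported above. The short calculation below shows the arithmetic; pairing the endpoints of the two ranges to get a lower and an upper bound is our own simplifying assumption.

```python
def percent_increase(before_ms: float, after_ms: float) -> float:
    """Relative increase from before_ms to after_ms, in percent."""
    return (after_ms - before_ms) / before_ms * 100.0


peft_baseline_ms = (400, 450)      # PEFT latency range with a single adapter
peft_many_ms = (3500, 4000)        # PEFT latency range with 1,000 adapters

# Smallest increase: highest baseline to lowest loaded latency.
smallest = percent_increase(peft_baseline_ms[1], peft_many_ms[0])
# Largest increase: lowest baseline to highest loaded latency.
largest = percent_increase(peft_baseline_ms[0], peft_many_ms[1])

print(f"{smallest:.0f}% to {largest:.0f}%")  # 678% to 900%
```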

In summary, HuggingFace PEFT makes it easy to adapt models and use adapters, but its latency and scalability suffer as the number of adapters grows. LoRAX, by contrast, loads adapters dynamically and serves predictions in real time, giving consistent performance even with a large number of LoRA fine-tuned models.

Conclusion

LoRAX handles many different fine-tuned LoRA models efficiently on top of one shared base model, which bodes well for businesses that want scalable AI services tailored to individual customers.

With its adapter loading strategies, businesses can scale to thousands of customer-specific fine-tuned LoRA models while maintaining a single base model.

For serving fine-tuned adapters, LoRAX is a game-changer: a scalable, optimized way to deploy many specialized models effectively.

TrueFoundry is an ML deployment PaaS over Kubernetes that speeds up developer workflows, giving developers full flexibility in testing and deploying models while ensuring full security and control for the infrastructure team. Through our platform, machine learning teams can deploy and monitor models in 15 minutes with 100% reliability, scalability, and the ability to roll back in seconds, allowing them to save costs, release models to production faster, and realize real business value.