Efficiently Serving LoRA fine-tuned models

In the domain of language models, the practice of fine-tuning is widely adopted to tailor an existing language model for specific tasks and datasets. There are a lot of good resources online that describe what is fine tuning and what are the different parameter efficient techniques for it. This blog assumes an understanding of fine-tuning & gives a very brief overview of LoRA. The focus here will be serving LoRA fine-tuned models, especially, if you have many of them.

Example Scenario: SaaS company fine-tuning a model per customer per task

While there are many examples where you would need to serve many fine-tuned models- one common use case we see are SaaS startups who have customer specific data and want to fine-tune models per customer. Obviously, this scales with the number of customers. Let’s put some numbers to it and evaluate our options.

Imagine, you are a SaaS startup with 500 customers, and you have 2 tasks where you want to fine-tune your LLMs: first is internal facing, where you want to consume their internal data and help the support agents answer queries and the second is where you want to send out marketing content that is aware of their brand voice. This means you’ll end up fine-tuning 1000 models.

Approach 1: Full parameter fine-tuning Llama 2–7B

Holding a Llama 2 7B model in memory in 16-bit precision would require a minimum of 14GB GPU RAM. Storing 1000 such models would require a minimum of 14000GB of GPU RAM which is 350 instances of A100–40GB GPUs, costing you a whopping $1M / month — assuming no redundancy in the system.

Approach 2: Full parameter fine-tuning Pythia–1B

You could argue that if you are fine-tuning, you might as well choose a smaller model — say Pythia 1B. This requires 2000GB GPU RAM, which is 50 instances of A100–40Gb, costing you ~$150k / month. This would likely have an impact on the quality of your responses as well, and most likely, if you have a new task on similar data, you’ll not be able to generalise.

Approach 3: LoRA fine-tuning Llama 2–7B

Another approach you could do is LoRA fine-tune 500 variants where each delta weight would consume about 200MB, so effectively, you would need <120GB of GPU RAM, which is 3 instances of A100–40GB, costing you about $8k. This would also ensure similar quality as Approach #1 and much better than Approach #2.

Approach 4: QLoRA fine-tuning Llama 2–7B with hot swapping

In a practical situation, you don’t expect equal traffic from all 1000 of your customers. You could arguably hold in memory say 100 most frequently queried customers and build some sort of LRU cache and hot swap models with new requests coming in. If you are holding 100 models in memory, then you need 14 GB (for base Llama 2) and 20GB (for 100 fine-tuned models) which can be served in a single instance of A100–40Gb costing < $3k each month. Infact, we use QLoRA where due to quantization, we can even hold a lot more models in the memory compared to LoRA, making it even more cost efficient.

Practically speaking, you don’t go from Approach 3 to Approach 4 to save 3X the cost but because it is much more efficient. You can scale infrastructure for customers with higher traffic more easily, and this also scales much better with your number of customers and number of tasks. You would take a small hit on loading and unloading these models in terms of latency.

For the rest of this blog, we will share details on Approach #4 which we believe is the most cost-efficient and practical way of scaling fine tuning.

LoRA Fine-tuning 101

Instead of iteratively updating the weight matrix W during fine-tuning for various tasks, LoRA proposes to store all these changes in a separate matrix, ΔW and freeze the original weight matrix (W) of the base model. This ΔW essentially acts like an adaptable lens that can be attached or detached from the base model as needed, allowing seamless task switching during inference. LoRA reduces the memory footprint of ΔW by utilizing a low-rank approximation (decompose the ΔW to Wa x Wb with the matrix rank specified), to further optimize the resource and memory footprint.

Therefore the updated weights will be W = W + ΔW (Wa x Wb)

To further enhance memory efficiency, Quantized LoRA (QLoRA) has been introduced. QLoRA loads the pre-trained model into GPU memory with quantized 4-bit weights (abbreviated as NF4), compared to the 8-bit or 16-bit weights used in LoRA. Despite this reduction in precision, QLoRA maintains performance levels similar to LoRA. For more technical details on 4-bit quantization, read here

LoRA, often denoted as ΔW, can be thought of as detachable layers that seamlessly integrate with a base model.

Experiment Setup

For our experiments, we’ve fine-tuned Llama2 for various tasks, such as delivery entity Named Entity Recognition (NER), medical tasks, Turkish translation, and even the alpaca dataset. We accomplished this fine-tuning using the QLoRA approach with BitsAndBytes and the PEFT library.

Our experiments are centered on understanding the resource and memory usage during these attach or detach operations of LoRA weights. We’ve painstakingly scrutinized any potential performance drops in the base model, disk usage, and the time taken for these operations.

Getting into the details

Here are some example code snippets for attaching and detaching LoRA weights to the base model that has been initialized.

# initialize the base llama2-7b instruct model

base_model = AutoModelForCausalLM.from_pretrained("meta-llama/Llama-2-7b-chat-hf",

torch_dtype=torch.bfloat16,

device_map=0)

tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-2-7b-chat-hf", device_map=0)

You can attach LoRA (ΔW) weights using .load_adapter(model_path, adapter_name) method,

# initialize a PeftModel and add multiply adapters to the base model using `.load_adapter.

model = PeftModel.from_pretrained(base_model, "./results/ner", adapter_name="adapter1", device_map=0)

model.load_adapter("./results/alpaca", adapter_name="adapter2", device_map=0)

model.load_adapter("./results/translate", adapter_name="adapter3", device_map=0)

All the adapters were finetuned with the same parameters (i.e., lora rank, targeting attention modules (q_proj, k_proj, v_proj, o_proj).

All the adapters were fine-tuned with the same parameters, namely LoRA rank of 64, targeting attention modules (q_proj, k_proj, v_proj, o_proj).

# adapter config for finetuned model

LoraConfig(lora_alpha=16,

lora_dropout=0.1,

r=64,

bias="none",

task_type="CAUSAL_LM",

target_modules=["q_proj","k_proj","v_proj","o_proj"])

- target_modules: The modules (for example, attention blocks) to apply the LoRA update matrices.

- r: rank of update matrices, lower rank results in smaller update matrices with less trainable parameters.

Notably, adding all linear layers, along with attention blocks, can improve model accuracy beyond a certain point.

r denotes the rank of the low-rank matrices that are acquired during the process of fine-tuning. As ‘r’ increases, the number of parameters that require updating in the low-rank adaptation also rises. In simple terms, a lower ‘r’ value can expedite the training process, making it less computationally demanding. However, this might come at the cost of model quality. Nonetheless, increasing ‘r’ beyond a certain point may not yield any noticeable improvement in the model’s performance.

During the fine-tuning with LoRA (Low-Rank Adaptation), it’s possible to focus on specific modules within the model’s architecture. The adaptation process is tailored to these modules, applying update matrices accordingly. Traditionally, practitioners tend to target only the attention blocks of the transformer, as this strikes a balance between adaptation quality and computational resources. However, recent research, such as the QLoRA paper authored by Dettmers et al., suggests that targeting all linear layers can result in superior adaptation quality.”

Benchmarks

Now, let’s talk about the resource requirements. LoRA parameters like r, and target_modules have direct effect on resource requirements and performance of the fine-tuned model.

Here’s a breakdown:

- GPU Resource Management: Initially, our Llama2 base model, with

bfloat16precision, consumed approximately 13.7GB of our 40GB GPU. After allocating this, we were left with ample space. And guess what? We successfully accommodated an impressive total of 95 adapters within the remaining GPU memory! - Disk Space Considerations: With an

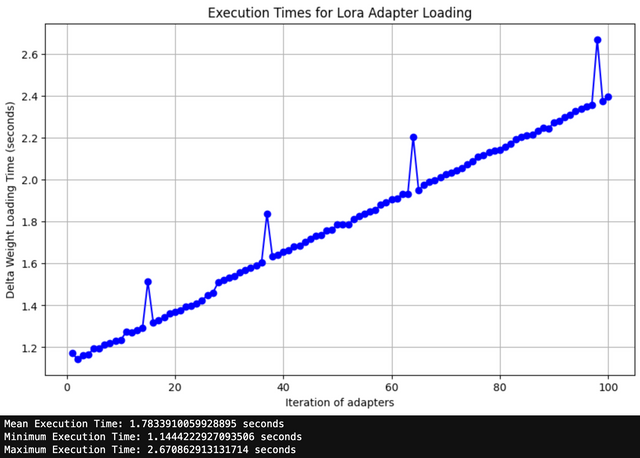

rrank set to 64 and targeting the quartet modules –q_proj, k_proj, v_proj, o_projfor LoRA – the model gobbled up nearly 250MB on the disk. A jump in rank, to 256, expanded the model’s footprint to hover between 1 and 1.2GB. Interestingly, by focusing solely on theq_projandv_projmodules, the space requirement for anrrank of 64 plummeted to around 130MB. Quite efficient, isn’t it? - A Glance at GPU Loading Dynamics: Every adapter, considering the aforementioned LoRA parameters, required about 250MB of our GPU memory for integration. However, the very first adapter was an exception, eating up around 500MB. Now, here’s a quirky observation: the GPU memory-loading time for each subsequent adapter gradually escalated. Check out the graph below, which paints a vivid picture of the increasing time durations for adapter loads.

When working with model updates, a common task is to swap out a model’s weights with an updated version. Let’s consider two ways of achieving this: directly updating the adapter weights or deleting and then re-loading them.

Here’s an example code snippet for the same:

# updating the adapter with the same name, we can replace its weights.

model.load_adapter("./results/multiply", adapter_name="adapter3")

(or)

# Delete the adapter from memory

model.delete_adapter("adapter3")

# Release the GPU memory

torch.cuda.empty_cache()

# Load the updated adapter

model.load_adapter("./results/multiply", adapter_name="adapter3")

In our observations, updating the weights with the same adapter name proved to be much quicker, taking about 200–300 ms. In contrast, the delete-and-re-load operation was comparatively slower.

Till now, we’ve delved into attaching, detaching, and modifying the LoRA (ΔW) weights. Let’s move forward and explore how to activate a specific adapter and then use it for inference:

# Set the adapter for the model

model.set_adapter("adapter2")

# Perform inference using the activated adapter

prompt = "...."

inputs = tokenizer(prompt, return_tensors="pt").to("cuda")

outputs = model.generate(**inputs, max_new_tokens=64, do_sample=True, top_k=50)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))

If you wish to temporarily bypass the adapters and perform inference using only the base Llama model, the disable_adapter context manager comes in handy:

with model.disable_adapter():

output_enabled = model.generate(**inputs, max_new_tokens=1024, do_sample=False)

print(tokenizer.decode(output_enabled[0], skip_special_tokens=True))

# Check which adapter is currently active

print(model.active_adapter)

While experimenting with the Llama2 model, we observed some intriguing patterns in inference times. Here’s a breakdown:

Using the base Llama2 model with parameters set to max_new_tokens=64, do_sample=True, and top_k=50, the inference time averaged around 2800ms when using the HuggingFace Transformers library. On attaching an LoRA adapter ΔW to the base model, we witnessed a 45% spike in the inference time, pushing it up to approximately 4100ms. This increase can be attributed to the added computational overhead due to the runtime weight updates that LoRA adapters introduce.

An interesting observation is that even when multiple adapters (say, 100 adapters) are stored in memory, the inference time remains consistent at 4100ms. This consistency is due to the fact that only one adapter is activated (using set_adapter) for inference, regardless of how many are available in memory.

A significant finding is that if you decide to integrate the adapter into the base model permanently using the merge_and_unload method rather than keeping it detachable, the inference time reverts back to the original 2800ms, due to merging of weights the runtime computation overhead is removed. Here’s a sample code:

model = PeftModel.from_pretrained(base_model,

"./models/ner",

adapter_name="ner",

device_map="auto")

# Fully merge the adapter weights onto the base model

model = model.merge_and_unload()

Further Inference Optimization

In technical terms, one could further optimize these inference times using DeepSpeed, although delving into this is beyond the purview of this article.

After applying DeepSpeed optimization, the model’s inference time has been slashed dramatically from 2800ms to just 800ms. This is a whopping 70% reduction in inference time. However, as of now, DeepSpeed hasn’t integrated support for multiple PEFT adapters. This means the only feasible approach to conduct inference with LoRA weights is to merge these weights with the base model. This streamlines the pipeline for swifter inference. Below is a code snippet for this process:

# load finetuned adapter weight onto base model

model = PeftModel.from_pretrained(base_model,

"./models/ner",

adapter_name="ner",

device_map="auto")

# merge the adapter weights to base model

model = model.merge_and_unload()

# optimize the model using deepspeed

ds_engine = deepspeed.init_inference(model,

mp_size=world_size,

dtype=torch.bfloat16,

checkpoint=None,

replace_with_kernel_inject=True)

model = ds_engine.module

For those eyeing a production scenario, it would be prudent to consider a more efficient inference server. An ideal recommendation would be HuggingFace Text Generation Inference. It’s particularly geared for handling extensive workloads. However, it’s worth noting that the support for multiple LoRA adapters on this platform is still under development.

In conclusion, LoRA and QLoRA are game-changers in fine-tuning language models, allowing you to tailor models for diverse tasks efficiently. So, embrace LoRA’s detachable layers and QLoRA’s quantized finesse for your next language model adventure!