SSH Server Containers For Development on Kubernetes

Last year, we discussed running Hosted Jupyter Notebooks and VS Code (Code Server) on your Kubernetes Clusters. We compared several approaches and existing solutions including renting and managing VMs. We then described our approaches to address usability issues to make the experience nicer and abstract away Kubernetes details.

Since then we have received lots of feedback from our customers, primarily about the lack of a good development experience for full-fledged apps. While Jupyter Lab is great for interactive notebooks and lightweight editing, pushing it to full IDE capabilities can require a lot of fiddling with Jupyter extensions, and it still might not be a great experience for non-Python codebases. To address such shortcomings we launched Code Server support, which is more or less VS Code in the browser. While it is a zero-setup solution and eases many Developer Experience problems with Jupyter Lab, users reported friction when working with VS Code extensions.

For example, Pylance, the extension that provides excellent Python language support in VS Code, cannot be installed on Code Server because of a Microsoft proprietary license. Instead, users have to rely on a combination of Jedi and Pyright, which are still not on par with Pylance. Yet another example is GitHub Copilot: while it is possible to install it on Code Server, it requires manually fiddling with the extension file and upgrading the Code Server version.

While the Code Server editing experience is not bad, sometimes there is a noticeable lag and jumbled-up text in the terminal which can be annoying. We always knew connecting local VS Code with remote VS Code Server via SSH or Tunnels would be a better experience.

The goal is to allow the users to create a deployment running OpenSSH Server in an Ubuntu-based container image with the same disk persistence as Jupyter Lab and let the users connect to it.

Here is how it looks on the platform (docs):

In this post, we'll walk through how we implemented connecting to containers via SSH without providing direct access to the cluster and without sending traffic outside the VPC.

Istio and Routing

When we deploy applications, we usually configure a domain name to reach those services, but buying a domain for each application is prohibitively expensive. Instead we buy a single domain (e.g. acmecorp.com), configure subdomains (docs.acmecorp.com) and/or path prefixes (acmecorp.com/blog/) and then use a router to match rules and route traffic to different applications.

We use Istio for all our ingress routing. Istio, among many features, offers convenient abstractions to configure the underlying Envoy proxy that is actually handling all the routing.

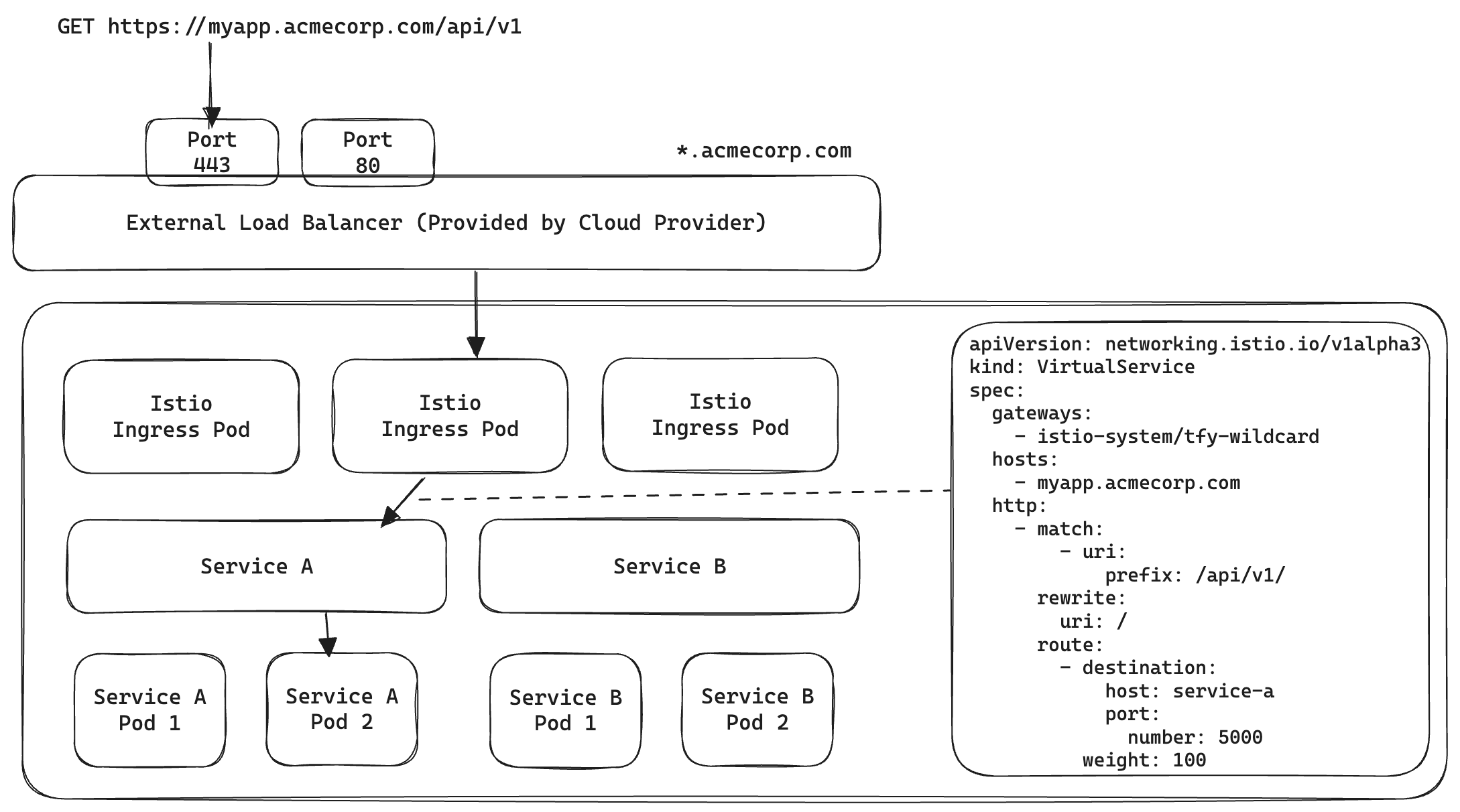

Let's understand how an HTTP request is routed.

In the setup above, when a user tries to fetch https://myapp.acmecorp.com/api/v1:

- First, myapp.acmecorp.com is resolved via the wildcard DNS record *.acmecorp.com to the public IP of the external load balancer. The port is inferred as 443 because of the https scheme.

- A TCP connection is established to the load balancer and the HTTP request payload is sent.

- The load balancer routes the request payload to the Istio Ingress pods.

- Istio Ingress looks at all VirtualService (and Gateway) configurations, matches the hostname (more importantly the subdomain myapp) and path prefix, and routes to the corresponding Kubernetes Service.

- Kubernetes routes the request to one of the Endpoints (Pod) for the Service

This is called Layer 7 Routing because we use actual fields from HTTP spec to do routing.
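To make the idea concrete, here is a minimal Python sketch of host and path-prefix matching. It is only an illustration of the concept, not how Istio or Envoy implement it; the rule table and the service names (`myapp-api-svc`, `myapp-web-svc`, `docs-svc`) are hypothetical.

```python
from typing import Optional

# Hypothetical routing table: (hostname, path prefix, Kubernetes Service).
# Rules are checked in order, so more specific prefixes come first.
RULES = [
    ("myapp.acmecorp.com", "/api/", "myapp-api-svc"),
    ("myapp.acmecorp.com", "/", "myapp-web-svc"),
    ("docs.acmecorp.com", "/", "docs-svc"),
]


def route(host: str, path: str) -> Optional[str]:
    """Return the service of the first rule matching the HTTP host and path."""
    for rule_host, prefix, service in RULES:
        if host == rule_host and path.startswith(prefix):
            return service
    return None  # No rule matched; a real router would answer with a 404.
```

Both inputs, `host` and `path`, come from the HTTP request itself, which is exactly what is unavailable once the traffic is plain TCP.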

Routing in context of SSH

SSH uses a custom protocol that uses TCP for transport. A simple SSH connection looks something like the following:

    ssh user@somemachine.acmecorp.com -p 22

Here we are trying to connect to somemachine.acmecorp.com on port 22, so somemachine.acmecorp.com:22 has to resolve to a unique IP address and port combination to reach the destination. But recall that in our setup, all subdomains point to the same load balancer: abc.acmecorp.com, xyz.acmecorp.com and somemachine.acmecorp.com all resolve to the same IP address, and Istio/Envoy is then supposed to look at the subdomain to decide where to route. For SSH, this is not possible. The hostname is only used for DNS resolution on the client; once the TCP connection is established, all Istio sees is the load balancer IP and port number. The SSH protocol has no equivalent of the HTTP Host header, and the rest of the session is encrypted. So how can we route to multiple different SSH destinations in the cluster?

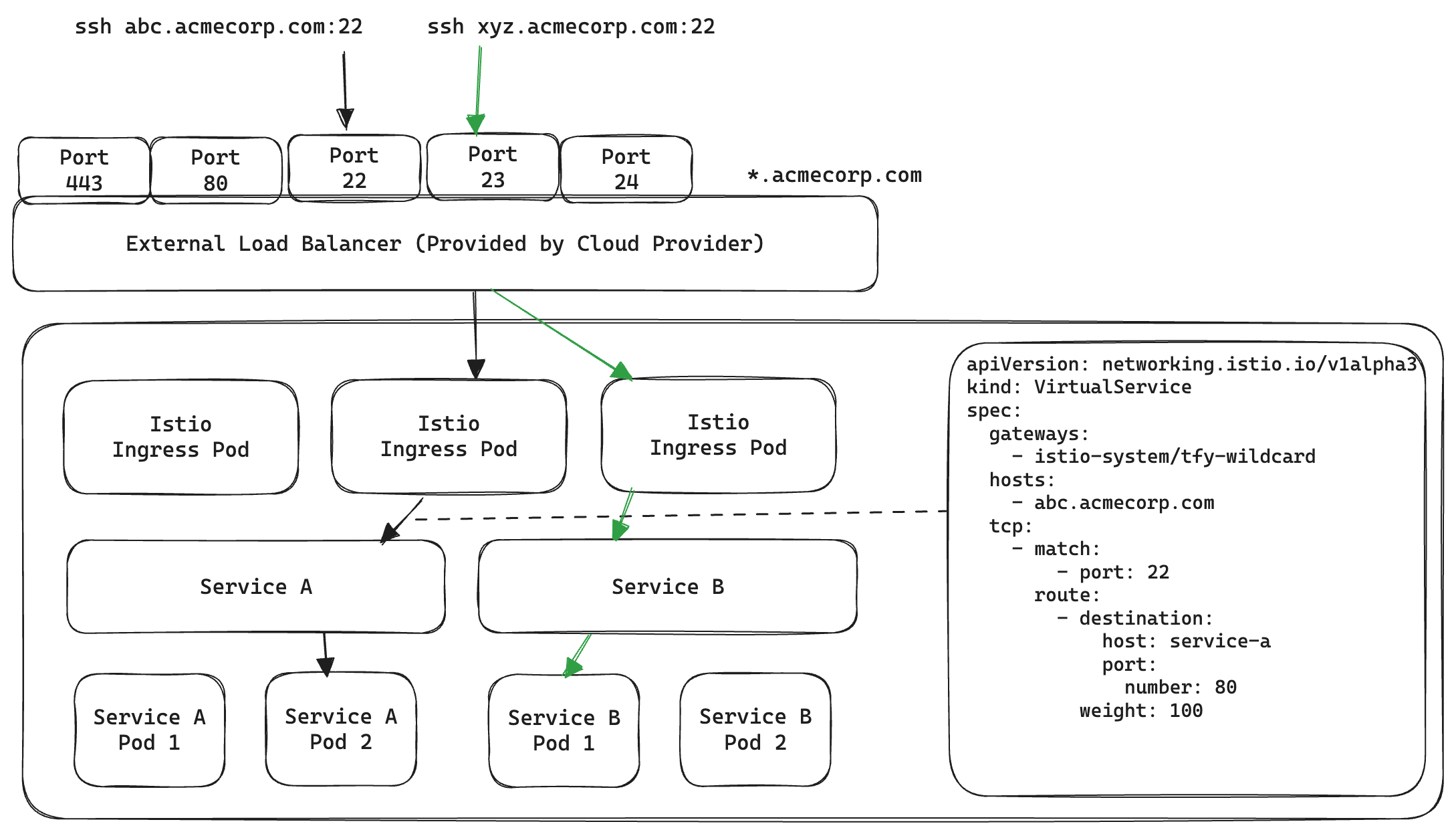

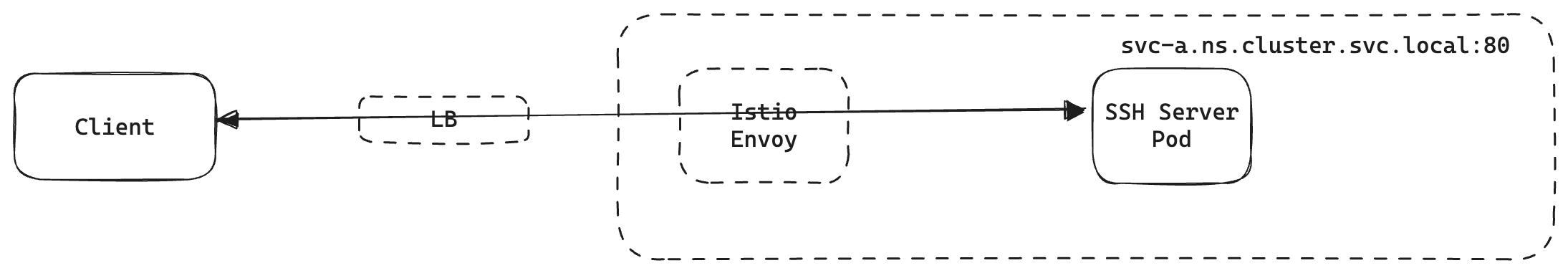

Option 1: Use unique ports on the same LoadBalancer

Since we only need to ensure unique combinations of IP address and port, we can just assign a different port on the load balancer to each SSH container.

We can then configure TCP routes that match on the port using Istio.

Here, all TCP traffic coming to port 22 of the LoadBalancer will reach Service A and all TCP traffic on port 23 will reach Service B.

While this works well, there are a few limitations

- A single load balancer can expose at most 65,535 ports, so that is the upper bound on reachable SSH containers. This is not a big deal because realistically we don't expect that many SSH containers to be deployed at the same time.

- The trickier problem is to dynamically open and free ports on the external load balancer, accurately and without ever disrupting any other normal traffic. While certainly possible, any bug or race condition could cause serious downtime for other applications. Not to mention that opening up arbitrary ports is a major security risk for many of our customers.
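As a rough illustration of the bookkeeping this option would require, here is a minimal in-memory port allocator in Python. It is a sketch under simplifying assumptions, not something we run: the class name, the port range and the service names are hypothetical, and it deliberately ignores the hard part, which is keeping the load balancer listeners and Istio routes in sync with this table.

```python
from typing import Dict, List


class PortAllocator:
    """Tracks which load balancer port is assigned to which SSH service."""

    def __init__(self, first: int = 2200, last: int = 2299) -> None:
        # Stored in descending order so that pop() hands out the lowest port.
        self._free: List[int] = list(range(last, first - 1, -1))
        self._by_service: Dict[str, int] = {}

    def allocate(self, service: str) -> int:
        """Return the port for a service, assigning a free one if needed."""
        if service in self._by_service:
            return self._by_service[service]
        if not self._free:
            raise RuntimeError("no free ports left on the load balancer")
        port = self._free.pop()
        self._by_service[service] = port
        return port

    def release(self, service: str) -> None:
        """Free the port of a deleted service so it can be reused."""
        port = self._by_service.pop(service, None)
        if port is not None:
            self._free.append(port)
```

Every `allocate` and `release` call would also have to be mirrored as a listener change on the external load balancer, which is where the race conditions mentioned above come from.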

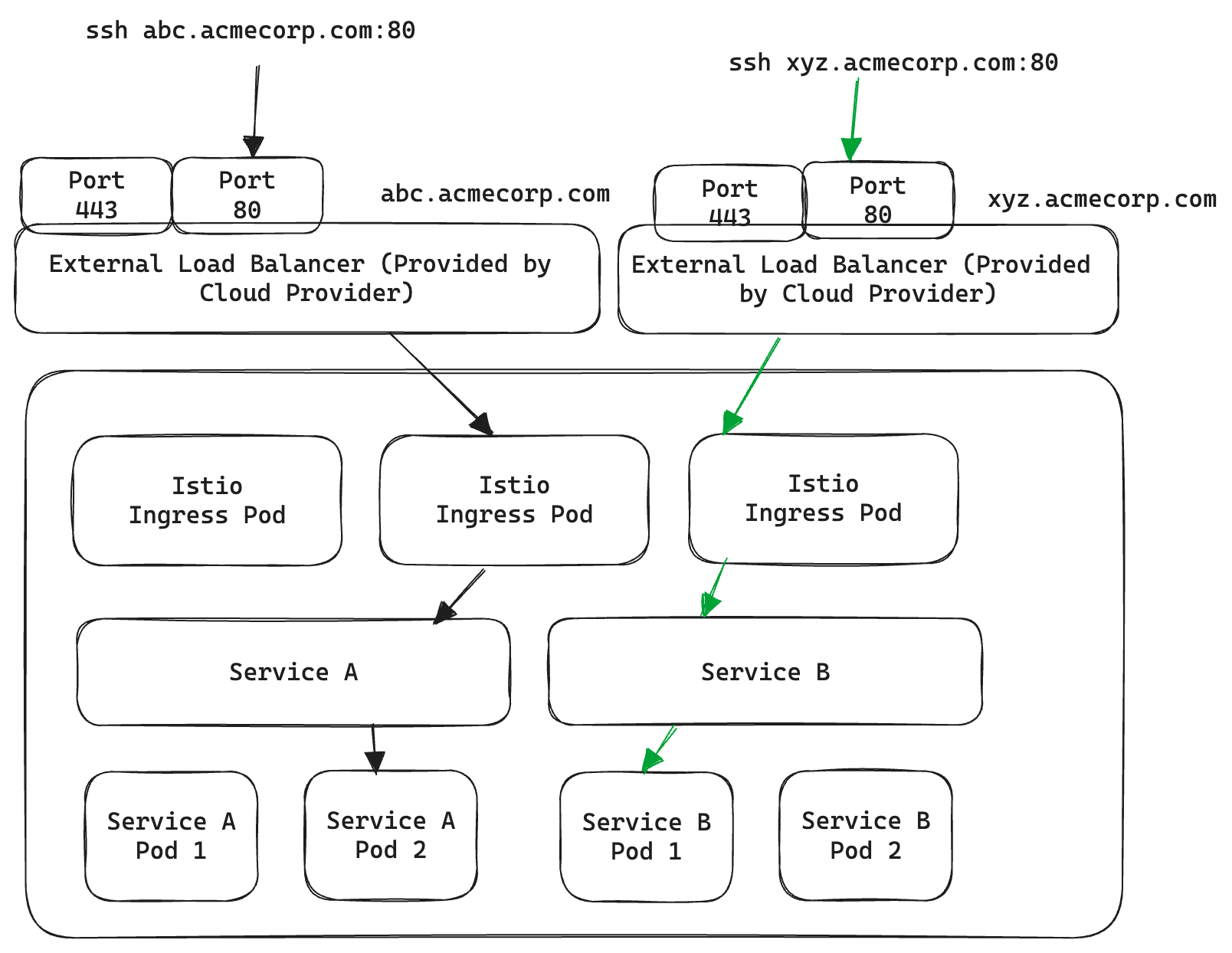

Option 2: Use a new LoadBalancer for each SSH container

In this case, we explicitly point abc.acmecorp.com and xyz.acmecorp.com to two different external load balancers instead of relying on the wildcard *.acmecorp.com. Each name now resolves to a unique IP address and can be routed by its own Istio Gateway (linked one-to-one with an external load balancer). The obvious limitation here is that provisioning a new load balancer per SSH container becomes prohibitively expensive.

Is there any way to take advantage of HTTP-level routing yet still only work with TCP traffic? Enter HTTP CONNECT!

Proxying using HTTP CONNECT

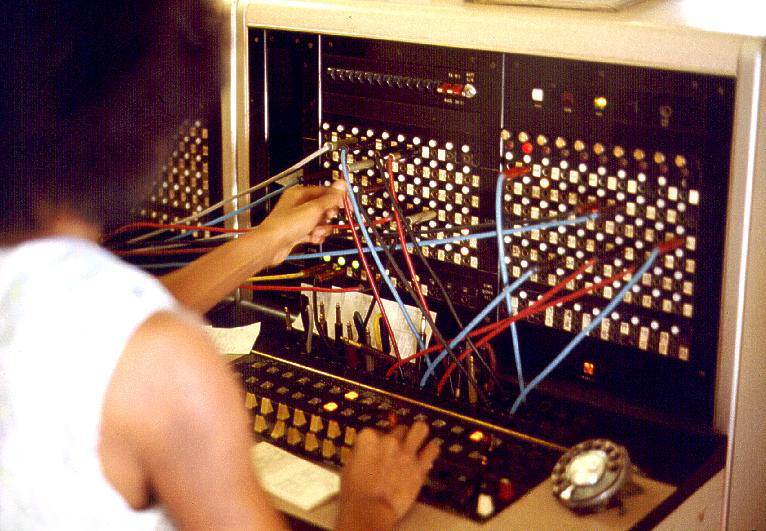

The HTTP CONNECT method allows establishing a "tunnel" between two destinations via a Proxy. Imagine the old days of telephone switchboards - you want to call a number but don't have a direct line to reach it, instead, an operator in between facilitates the connection on your behalf and then gets out of the way to let the two parties communicate.

We recommend watching the following video for a good explanation: https://www.youtube.com/watch?v=PAJ5kK50qp8

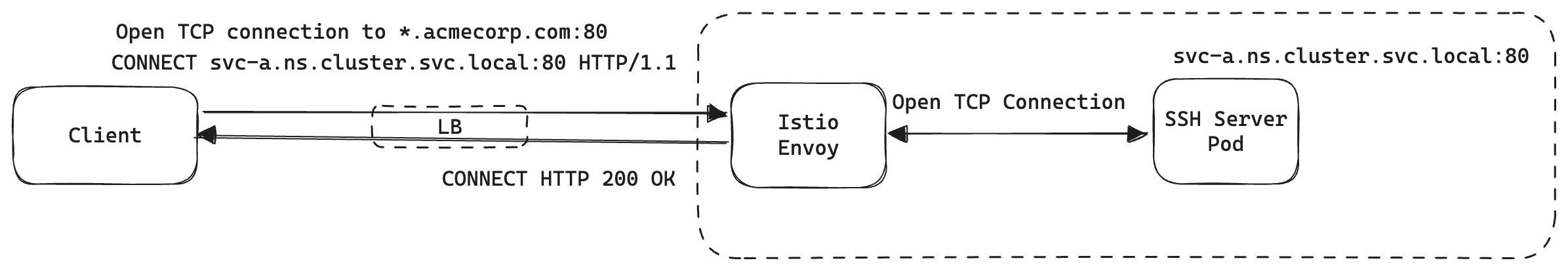

Fortunately, in our case, we already use a proxy capable of using CONNECT - Envoy Proxy. Let's look at how it would work in our use case:

- The client opens a connection to acmecorp.com:80 - the external load balancer which routes traffic to Envoy.

- The client sends an HTTP CONNECT request:

      CONNECT svc-a.ns.svc.cluster.local:80 HTTP/1.1
      Host: svc-a.ns.svc.cluster.local

  which instructs Envoy to establish a TCP connection to svc-a.ns.svc.cluster.local:80 on the client's behalf.

- Once the connection is established, a 200 OK is returned to the client.

- After this point, Envoy stops caring about the traffic contents and acts as a "tunnel" allowing the traffic to flow between the client and the pod. It can be anything that works on top of TCP including but not limited to SSH.

Note that svc-a.ns.svc.cluster.local:80 is a Kubernetes Service and does not point to any public IP address; it can only be resolved inside the Kubernetes cluster. Since Envoy lives inside the cluster, we can configure it to reach the pods behind it.

All that is left is to configure Envoy to do such routing. Unfortunately, Istio does not have high-level abstractions to configure this easily. Instead, we have to apply patches to the Envoy configuration using Envoy Filters.
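The handshake described above can be sketched from the client's side in a few lines of Python. This is a minimal illustration using only the standard library, not the tool we use in practice; the function names are hypothetical, and the request mirrors the example in this post (including a Host header without the port).

```python
import socket


def build_connect_request(host: str, port: int) -> bytes:
    """Build the CONNECT request asking the proxy to open a tunnel."""
    return (
        f"CONNECT {host}:{port} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "\r\n"
    ).encode("ascii")


def tunnel_established(response_head: bytes) -> bool:
    """Return True if the proxy answered the CONNECT with a 2xx status."""
    status_line = response_head.split(b"\r\n", 1)[0].decode("ascii", "replace")
    parts = status_line.split(" ", 2)
    return len(parts) >= 2 and parts[0].startswith("HTTP/") and parts[1].startswith("2")


def open_tunnel(proxy_host: str, proxy_port: int,
                target_host: str, target_port: int,
                timeout: float = 10.0) -> socket.socket:
    """Connect to the proxy, perform CONNECT, and return the raw socket."""
    sock = socket.create_connection((proxy_host, proxy_port), timeout=timeout)
    try:
        sock.sendall(build_connect_request(target_host, target_port))
        head = b""
        # Read byte by byte so that no tunnelled data (for example the SSH
        # banner) is consumed together with the HTTP response headers.
        while b"\r\n\r\n" not in head:
            chunk = sock.recv(1)
            if not chunk:
                raise ConnectionError("proxy closed the connection during CONNECT")
            head += chunk
        if not tunnel_established(head):
            raise ConnectionError("proxy refused the CONNECT request")
    except Exception:
        sock.close()
        raise
    return sock
```

After `open_tunnel` returns, every byte written to the socket is forwarded to the target as-is, which is the property that lets SSH run through the tunnel.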

Envoy Filters

Understanding Envoy capabilities and Envoy Filters is out of the scope of this blog post, but just take it as a convenient way to modify Istio routing rules using small patches. To enable CONNECT-based routing we need to:

- Have a publicly exposed port on the LoadBalancer to accept TCP traffic (e.g. 2222) and configure the corresponding Istio Gateway to accept HTTP traffic on it. We chose to stick with port 80 because we already use it for normal HTTP traffic and SSH traffic is going to be encrypted anyway.

- Configure the publicly exposed port on Gateway to accept CONNECT type requests. We found this is already enabled for requests to Port 80. For any other port, you can apply an Envoy Filter like so:

E.g. Enable CONNECT on Port 2222

    apiVersion: networking.istio.io/v1alpha3
    kind: EnvoyFilter
    spec:
      configPatches:
        - applyTo: NETWORK_FILTER
          match:
            context: GATEWAY
            listener:
              filterChain:
                filter:
                  name: envoy.filters.network.http_connection_manager
              portNumber: 2222
          patch:
            operation: MERGE
            value:
              typed_config:
                '@type': >-
                  type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
                http2_protocol_options:
                  allow_connect: true
                upgrade_configs:
                  - upgrade_type: CONNECT
      workloadSelector:
        labels:
          app: tfy-istio-ingress

- For each SSH Container configure CONNECT based routing:

    apiVersion: networking.istio.io/v1alpha3
    kind: EnvoyFilter
    metadata:
      name: svc-a-ns-ssh-envoy-filter
      namespace: istio-system
    spec:
      configPatches:
        - applyTo: NETWORK_FILTER
          match:
            context: GATEWAY
            listener:
              filterChain:
                filter:
                  name: envoy.filters.network.http_connection_manager
              portNumber: 80
          patch:
            operation: MERGE
            value:
              typed_config:
                '@type': >-
                  type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
                route_config:
                  name: local_route
                  virtual_hosts:
                    - domains:
                        - svc-a.ns.svc.cluster.local:80
                      name: svc-a-ns-ssh-vh
                      routes:
                        - match:
                            connect_matcher: {}
                          route:
                            cluster: >-
                              outbound|80||svc-a.ns.svc.cluster.local
                            upgrade_configs:
                              - connect_config: {}
                                enabled: true
                                upgrade_type: CONNECT
      workloadSelector:
        labels:
          istio: tfy-istio-ingress

That's a very scary-looking YAML, but all we are doing is modifying the listener on port 80 of the Gateway to match CONNECT requests to svc-a.ns.svc.cluster.local:80 and route them to outbound|80||svc-a.ns.svc.cluster.local, i.e. port 80 of the Kubernetes Service svc-a.ns.svc.cluster.local, where our OpenSSH server is waiting for SSH connections inside the container.
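Since one such filter is needed per SSH container, it is natural to generate it from a template. The following Python sketch builds the same structure as the manifest above for a given service and namespace. The function name and its parameters are hypothetical, and the defaults (port 80, the `istio: tfy-istio-ingress` selector, the `istio-system` namespace) are simply copied from the example in this post.

```python
import json
from typing import Dict, Optional

HCM_FILTER = "envoy.filters.network.http_connection_manager"
HCM_TYPE = (
    "type.googleapis.com/envoy.extensions.filters.network."
    "http_connection_manager.v3.HttpConnectionManager"
)


def ssh_envoy_filter(service: str, namespace: str, service_port: int = 80,
                     gateway_port: int = 80,
                     gateway_labels: Optional[Dict[str, str]] = None) -> dict:
    """Build the per-service EnvoyFilter manifest as a plain dictionary."""
    fqdn = f"{service}.{namespace}.svc.cluster.local"
    labels = gateway_labels or {"istio": "tfy-istio-ingress"}
    # Route CONNECT requests for this authority to the service's cluster.
    route = {
        "match": {"connect_matcher": {}},
        "route": {
            "cluster": f"outbound|{service_port}||{fqdn}",
            "upgrade_configs": [
                {"connect_config": {}, "enabled": True, "upgrade_type": "CONNECT"}
            ],
        },
    }
    virtual_host = {
        "name": f"{service}-{namespace}-ssh-vh",
        "domains": [f"{fqdn}:{service_port}"],
        "routes": [route],
    }
    patch_value = {
        "typed_config": {
            "@type": HCM_TYPE,
            "route_config": {"name": "local_route", "virtual_hosts": [virtual_host]},
        }
    }
    listener_match = {
        "portNumber": gateway_port,
        "filterChain": {"filter": {"name": HCM_FILTER}},
    }
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "EnvoyFilter",
        "metadata": {
            "name": f"{service}-{namespace}-ssh-envoy-filter",
            "namespace": "istio-system",
        },
        "spec": {
            "configPatches": [{
                "applyTo": "NETWORK_FILTER",
                "match": {"context": "GATEWAY", "listener": listener_match},
                "patch": {"operation": "MERGE", "value": patch_value},
            }],
            "workloadSelector": {"labels": labels},
        },
    }


if __name__ == "__main__":
    # JSON is accepted wherever a YAML manifest is, so this can be applied as-is.
    print(json.dumps(ssh_envoy_filter("svc-a", "ns"), indent=2))
```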

Initiating CONNECT on SSH Client Side

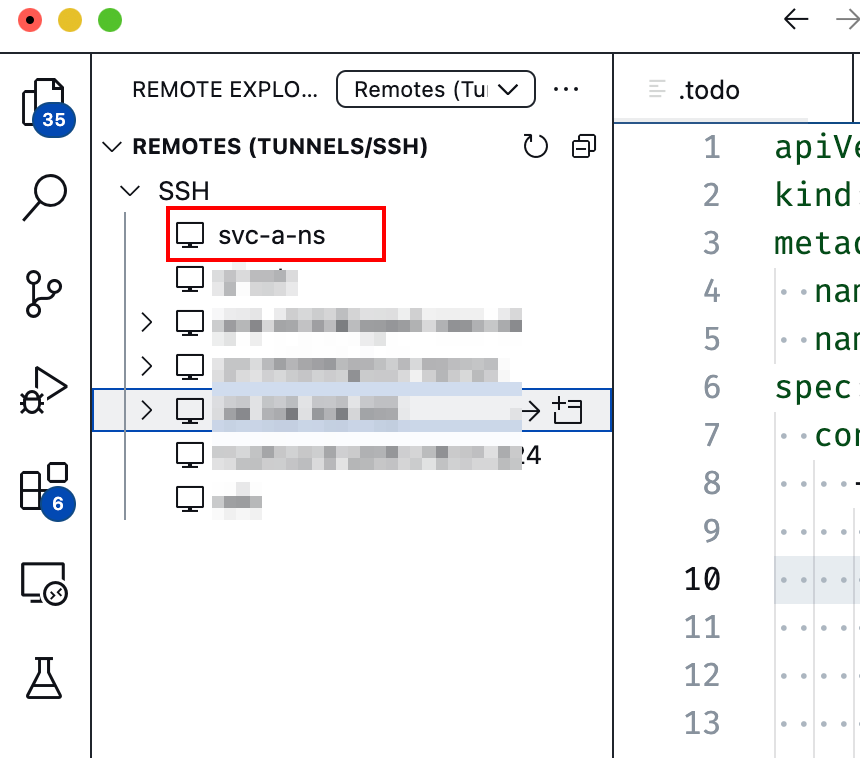

On its own, the SSH client knows nothing about HTTP CONNECT. Instead, it offers a ProxyCommand option which allows another program to set up the connection on its behalf. Here we use the ProxyTunnel project, which makes this easy. The config in ~/.ssh/config looks as follows:

    Host svc-a-ns
      User jovyan
      HostName svc-a.ns.svc.cluster.local
      Port 80
      ServerAliveInterval 100
      IdentityFile ~/.ssh/my-private-key
      ProxyCommand proxytunnel -v -p ssh.acmecorp.com:80 -o %h -d %h:%p

With all that done, users can now easily connect and set up their favourite dev workflow - be it Neovim, VS Code, JetBrains IDE, etc.
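Because the entry follows a fixed pattern, it can be generated per container. Below is a small Python sketch that renders such an entry; the function name and its parameters are hypothetical, and the defaults are the values from the example above.

```python
def ssh_config_entry(service: str, namespace: str,
                     proxy: str = "ssh.acmecorp.com:80",
                     user: str = "jovyan",
                     identity_file: str = "~/.ssh/my-private-key",
                     port: int = 80) -> str:
    """Render a ~/.ssh/config entry that tunnels SSH through HTTP CONNECT."""
    lines = [
        f"Host {service}-{namespace}",
        f"    User {user}",
        f"    HostName {service}.{namespace}.svc.cluster.local",
        f"    Port {port}",
        "    ServerAliveInterval 100",
        f"    IdentityFile {identity_file}",
        # %h and %p are expanded by ssh to the HostName and Port above.
        f"    ProxyCommand proxytunnel -v -p {proxy} -o %h -d %h:%p",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(ssh_config_entry("svc-a", "ns"), end="")
```

With the rendered entry appended to ~/.ssh/config, `ssh svc-a-ns` is enough to connect.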

Limitations & Potential Solutions

While this feature greatly enhances the Developer Experience in terms of code editing and execution, some limitations still apply because we are still running inside a container.

- Docker does not work because we are already inside a container. Theoretically, it is possible to get a few things working with DIND, but it comes with its challenges.

- Changes made to the root file system of the container (/) are not persistent across container restarts. We provide a way to extend our SSH Server image and start from those custom images.

- Kubernetes Pods are meant to be ephemeral and can be moved around, but that is undesirable for a development environment. We configure pod disruption budgets to prevent the pod from being moved around.

- Even though the proxying is transparent, the traffic is still flowing through the load balancer and the Istio Envoy pods. That means heavy operations during development, like uploading or downloading huge files, can eat up bandwidth and resources and affect other traffic. It is best to use a separate set of LoadBalancer, Gateway and Envoy pods for connecting to SSH containers.

SSH server containers on Kubernetes at TrueFoundry

TrueFoundry is an ML/LLM deployment PaaS over Kubernetes to speed up developer workflows while allowing them full flexibility in testing and deploying models while ensuring full security and control for the Infra team. Through our platform, we enable teams to deploy and monitor models in 15 minutes with 100% reliability, scalability, and the ability to roll back in seconds - allowing them to save cost and release Models to production faster, enabling real business value realisation.