Enabling the Large Language Models Revolution: GPUs on Kubernetes

🚀 Why GPUs?

Large language models like ChatGPT and diffusion models like Stable Diffusion have taken the world by storm in just under a year. More and more organisations are now beginning to leverage Generative AI for their existing and exciting new use cases. While most companies can directly start using APIs provided by companies like OpenAI, Anthropic, Cohere, etc, these APIs also come with a hefty cost. In the long term, many companies would like to finetune small to mid-size versions of equivalent open-source LLMs like Llama, Flan-T5, Flan-UL2, GTP-Neo, OPT, Bloom, etc. as Alpaca and GPT4All projects did.

Finetuning smaller models with outputs from larger models can be useful in multiple ways:

- Models can be aligned better with the target use case.

- A fine-tuned model can act as a stronger base model for other models in the organisation.

- Easier and cheaper to serve if the size is smaller.

- Allows user data privacy during inference time.

To enable all this, GPUs have become an essential workhorse in any company working with these foundational models. With model sizes growing and reaching trillions of parameters distributed training over multiple GPUs is slowly becoming the new norm. Nvidia is leading the hardware space with their newer Ampere and Hopper series cards. High-speed NVLink and Infiniband interconnect allow connecting up to 256 Nvidia A100 or Nvidia H100 (and ~4k in super pod clusters) to train and infer with ever-larger models in record times.

👷 Using GPUs with Kubernetes

We'll now walk over the components needed to use GPUs with Kubernetes - mainly on AWS EKS and GCP GKE (Standard or Autopilot) but the mentioned components are essential on any K8s cluster.

Provisioning the GPU Nodepools

Your cloud provider has GPU VMs, how do we bring them to the K8s cluster? One way is to manually configure GPU Nodepools of fixed size or with cluster autoscaler that can bring in GPU nodes as and when needed and let go when not. However, this still needs manual configuration of several different node pools. An even better solution is to configure Auto Provisioning systems like AWS Karpenter or GCP Node Auto Provisioners. We talk about these in our previous article: Cluster Autoscaling for Big 3 Clouds ☁️

Sample Karpenter config

apiVersion: karpenter.sh/v1alpha5

kind: Provisioner

metadata:

name: gpu-provisioner

namespace: karpenter

spec:

weight: 10

kubeletConfiguration:

maxPods: 110

limits:

resources:

cpu: "500"

requirements:

- key: karpenter.sh/capacity-type

operator: In

values:

- spot

- on-demand

- key: topology.kubernetes.io/zone

operator: In

values:

- ap-south-1

- key: karpenter.k8s.aws/instance-family

operator: In

values:

- p3

- p4

- p5

- g4dn

- g5

taints:

- key: "nvidia.com/gpu"

effect: "NoSchedule"

providerRef:

name: default

ttlSecondsAfterEmpty: 30Sample GCP Node Auto Provisioner config

resourceLimits:

- resourceType: 'cpu'

minimum: 0

maximum: 1000

- resourceType: 'memory'

minimum: 0

maximum: 10000

- resourceType: 'nvidia-tesla-v100'

minimum: 0

maximum: 4

- resourceType: 'nvidia-tesla-t4'

minimum: 0

maximum: 4

- resourceType: 'nvidia-tesla-a100'

minimum: 0

maximum: 4

autoprovisioningLocations:

- us-central1-c

management:

autoRepair: true

autoUpgrade: true

shieldedInstanceConfig:

enableSecureBoot: true

enableIntegrityMonitoring: true

diskSizeGb: 100Note here we can also configure our provisioners to use spot type instances to get anywhere from 30-90% cost savings for stateless applications.

Adding Nvidia Drivers

For any virtual machine to use GPUs, its drivers need to be installed on the host. Fortunately, on both AWS EKS and GCP GKE nodes are preconfigured with certain versions of Nvidia Drivers.

Because each newer CUDA version requires a higher minimum driver version, you might even want to control the driver version for all nodes. This can be done by provisioning nodes with custom images that do not have the driver and letting Nvidia gpu-operator install a specified version. However, this may not be allowed on all cloud providers, so be aware of the driver versions on your nodes to avoid compatibility headaches.

We talk about the gpu-operator later below.

Making GPUs accessible to Pods

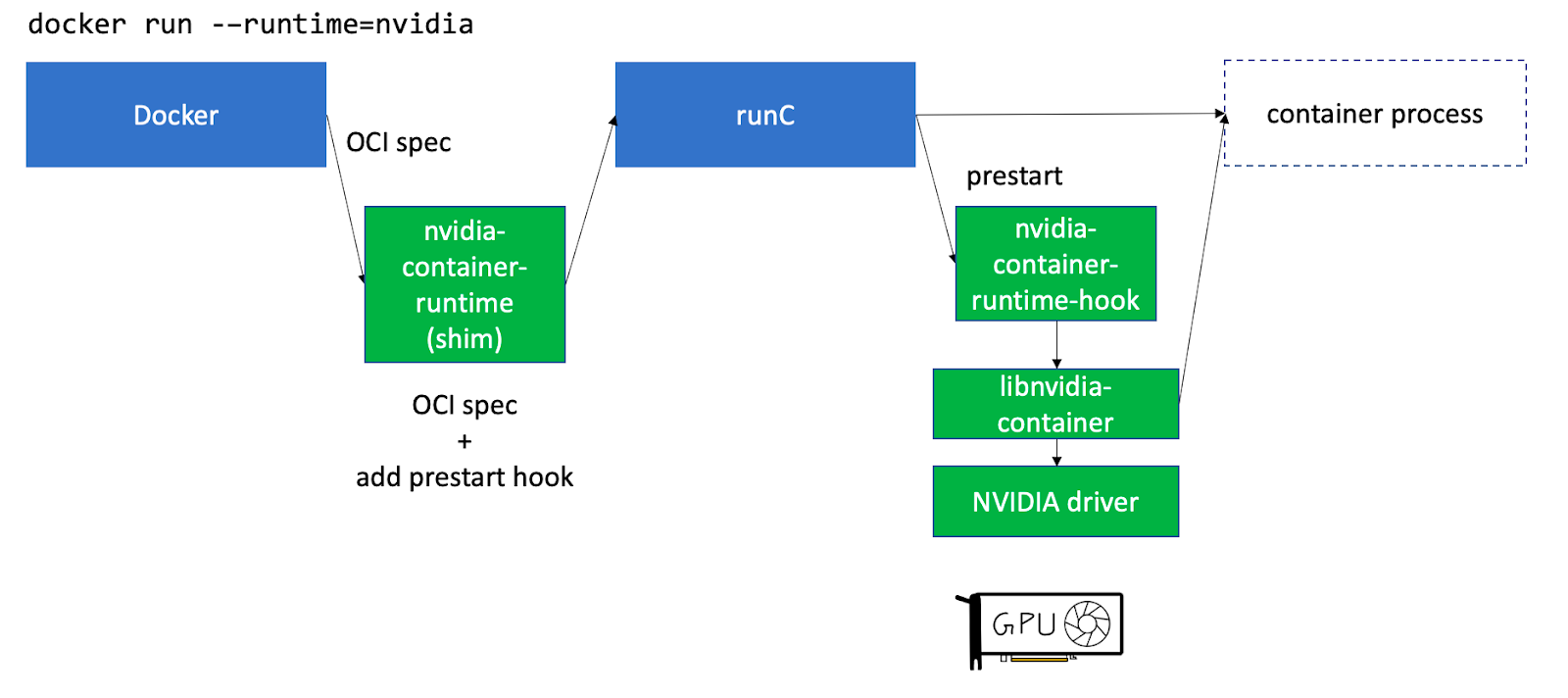

In Kubernetes since everything runs inside pods (a set of containers) just installing drivers on the host is not enough. Nvidia provides a standalone component called nvidia-container-toolkit that installs hooks to containerd runC to make the host's GPU drivers and devices available to the containers running on the node. See this article for a more detailed explanation.

nvidia-container-toolkit installs hooks to provide containers GPUs Sourcenvidia-container-toolkit can be installed to be run as Daemonset on the GPU nodes.

Making Nodes advertise GPUs

Having GPUs on the node is not enough, the Kubernetes scheduler needs to know which node has how many GPUs available. This can be done using a Device Plugin. A device plugin allows advertising custom hardware resources to the control plane e.g. nvidia.com/gpu . Nvidia has published a device plugin that advertises allocatable GPUs on a node. This plugin again can be run as a Daemonset.

Putting Pods on GPU Nodes

Once the above components are configured we need to add a few things to the pod spec to schedule it on the GPU node - mainly resources , affinity and tolerations

E.g. on GCP GKE we can do:

spec:

# We define how many gpus we want for the pod

resources:

limits:

nvidia.com/gpu: 2

# affinities help us place the pod on the GPU nodes

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

# Specify which instance family we want

- operator: In

key: cloud.google.com/machine-family

values:

- a2

# Specify which gpu type we want

- operator: In

key: cloud.google.com/gke-accelerator

values:

- nvidia-tesla-a100

# Specify we want a spot VM

- operator: In

key: cloud.google.com/gke-spot

values:

- "true"

tolerations:

# Spot VMs have a taint, so we mention a toleration for it

- key: cloud.google.com/gke-spot

operator: Equal

value: "true"

effect: NoSchedule

# We taint the GPU nodes, so we mention a toleration for it

- key: nvidia.com/gpu

operator: Exists

effect: NoSchedule- We specify how many GPUs we want in the

resources.limitssection - We specify our node requirements - what instance family, what GPU type, and whether the node should be a spot instance or not. We can even add region and topology preferences here.

- And we specify corresponding tolerations because our nodes are usually tainted to make sure only pods that need GPUs and can handle interruptions will be put on such nodes

Note that these configurations will differ based on what provisioning methods and cloud providers you use (e.g. Karpenter on AWS vs NAP on GKE)

Getting GPU Usage Metrics

Monitoring GPU metrics like Utilisation, Memory Usage, Power Draw, Temperature, etc is important to ensure things are working smoothly as well as to do further optimisations.

Fortunately, Nvidia has a component called dcgm-exporter that can run as Daemonset on GPU nodes and publish metrics at an endpoint. These metrics can then be scraped with Prometheus and consumed. Here is an example scrape config:

- job_name: gpu-metrics

scrape_interval: 15s

scrape_timeout: 10s

metrics_path: /metrics

scheme: http

kubernetes_sd_configs:

- role: endpoints

namespaces:

names:

- <dcgm-exporter-namespace-here>

relabel_configs:

- source_labels: [__meta_kubernetes_pod_node_name]

action: replace

target_label: kubernetes_node

However, note that dcgm-exporter needs to run with hostIPC: true and privileged securityContext. This is fine for EKS and GKE Standard. However, GKE Autopilot does not allow such access, instead, GKE publishes metrics on the preconfigured nvidia-device-plugin Daemonsets which can be scraped or viewed in GCP Cloud Monitoring.

Summary

| AWS EKS | GCP GKE Standard |

GCP GKE Autopilot |

|

|---|---|---|---|

| Provisioning | Karpenter / Manual |

GCP Node Auto Provisioner / Manual |

Auto Provisioning |

| Drivers | Preinstalled/Install via gpu-operator |

Preinstalled | Preinstalled |

| Container Toolkit | nvidia-container-toolkit via gpu-operator |

Preconfigured | Preconfigured |

| Device Plugin | nvidia-device-plugin via gpu-operator |

Preconfigured Daemonset | Preconfigured Daemonset |

| Metrics | nvidia-dcgm-exporter via gpu-operator |

Standalone nvidia-dcgm-exporter / Custom scraping |

Custom Scraping |

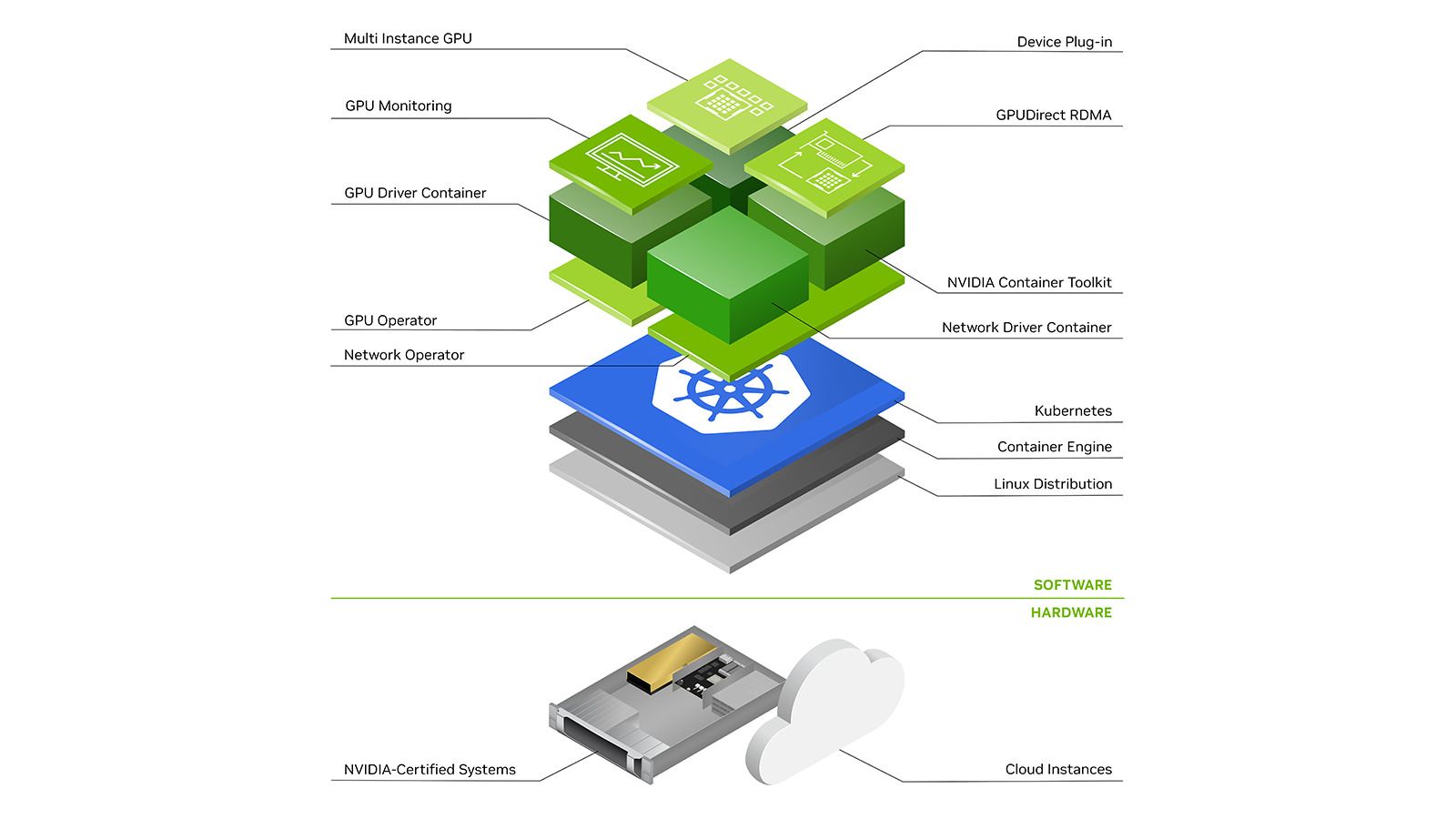

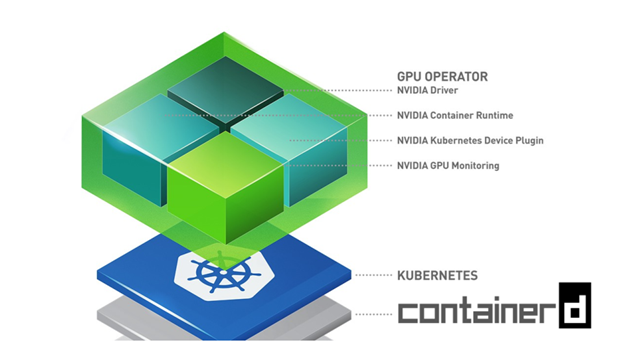

Nvidia's GPU Operator

The gpu-operator mentioned above for most parts on AWS EKS is a bunch of standalone Nvidia components like drivers, container-toolkit, device-plugin, and metrics exporter among others, all combined and configured to be used together via a single helm chart. The gpu-operator runs a master pod on the control plane which can detect GPU nodes in the cluster. On detection of a GPU node, it deploys a worker Daemonset that further schedules pods to optionally install drivers, container toolkit, device-plugin, CUDA toolkit, metrics exporter and validators. You can read more about it here.

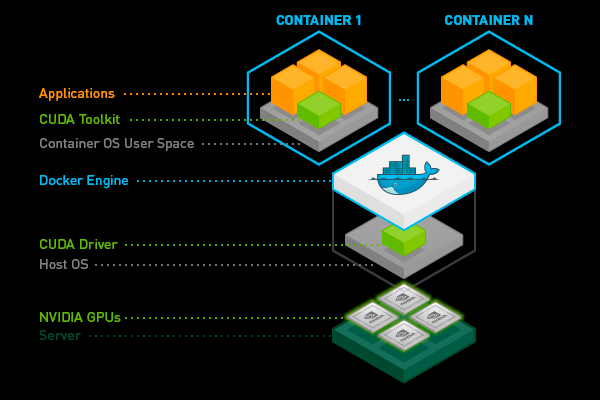

A note on CUDA Toolkit

Generally, it is possible to have the CUDA toolkit installed on the host machine and have it made available to the pod via volume mounting, however, we find this can be quite brittle as it requires fiddling with PATH and LD_LIBRARY_PATH variables. Moreover, all pods on the same node have to use the same CUDA toolkit version which can be quite restrictive. Hence it is better to put the CUDA toolkit (or just parts of it) inside the container image.

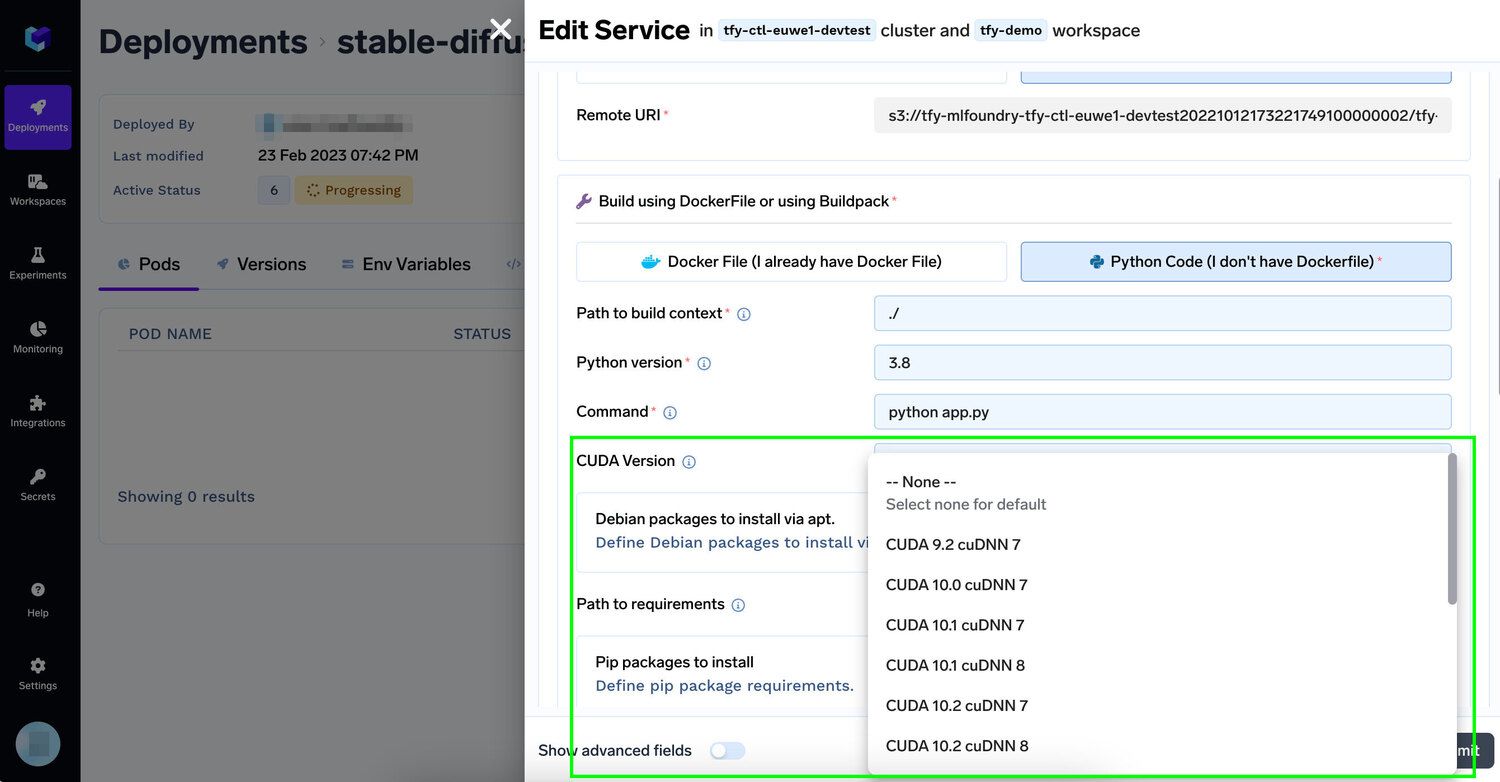

You can start from already-built images provided by Nvidia or your favourite deep-learning framework or add it using one line on the Truefoundry platform

✨ Using GPUs with Truefoundry

To enable organisations to fine-tune and ship their Generative AI models faster on their existing infrastructure, the Truefoundry Platform allows developers to add one or more Nvidia GPUs to their applications with minimal effort. Developers only need to specify how many instances of some of the best GPUs for Machine Learning like V100, P100, A100 40GB, A100 80GB (optimal for training) or T4, A10 (optimal for inference) they need and we do the rest. Read more on our docs.

🔮 Looking forward

GPUs are a fantastic technology and this is just the beginning for us. We are actively working on the following problems:

- GPUs are quite expensive, so monitoring costs and "rightsizing" the GPU assignments and bin packing as much as possible is important.

- While Generative AI use cases might require insanely powerful multi-GPU training not all models need a complete GPU. Vanilla Kubernetes only allows complete assignments of one GPU to any pod, we want to provide GPUs in smaller than 1 unit with technologies like Nvidia MPS and MIG.

- GPUs are selling like hotcakes right now and contrary to popular belief - the cloud is not infinitely scalable (at least with GPU quotas), it is important to leverage quotas in multiple regions.

- GPUs are an important part of the story but not the only part, we want our users to bring/move their data and models around easily.

- Scaling to a high number of nodes introduces new networking, i/o, K8s control plane, etcd scaling and other engineering challenges to solve.

If any of this sounds exciting, please reach out to work with us to build the best MLOps platform.

TrueFoundry is a ML Deployment PaaS over Kubernetes to speed up developer workflows while allowing them full flexibility in testing and deploying models while ensuring full security and control for the Infra team. Through our platform, we enable Machine learning Teams to deploy and monitor models in 15 minutes with 100% reliability, scalability, and the ability to roll back in seconds - allowing them to save cost and release Models to production faster, enabling real business value realisation.